Resources and links documenting Tol's 24 errors

Yesterday, we published a list of 24 errors in Tol's critique of our consensus paper Quantifying the consensus on anthropogenic global warming in the scientific literature. The short URL for our 24 errors report, handy for tweeting and posting in comments, is:

http://sks.to/24errors

In addition, the Global Change Institute at The University of Queensland issued a statement summarising our response to Tol (2014), including a scholarly version of the 24-errors report (i.e., the same content but without all the bright, shiny boxes).

Today, a link to our reply to Tol made it onto the homepage of Reddit Science, causing our website traffic to surge to 20 times its normal level (so apologies for the sluggish server performance earlier today).

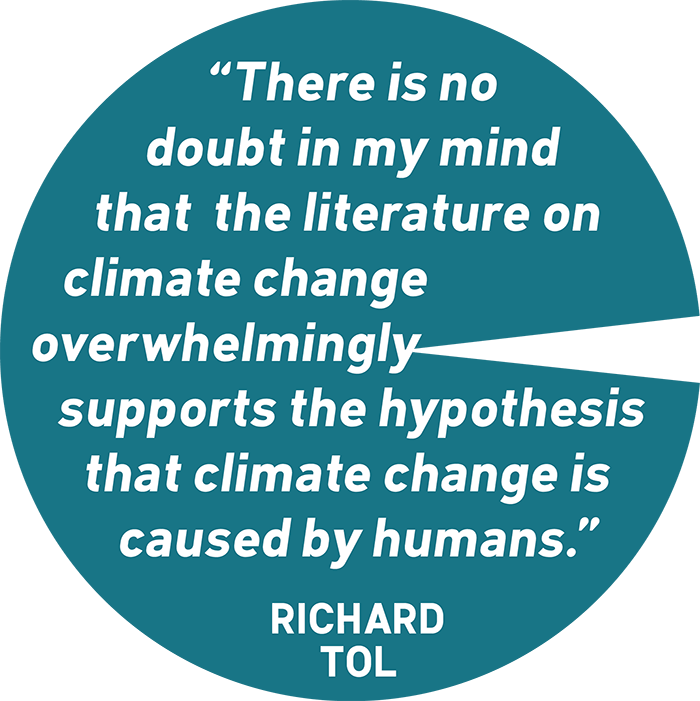

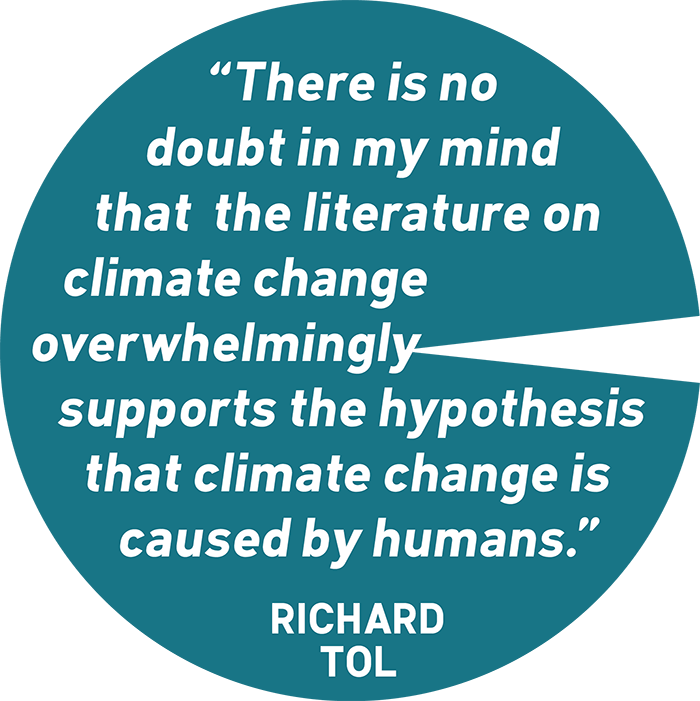

One of the eyebrow-raising elements of Tol (2014) is that his analysis still finds an overwhelming consensus on human-caused global warming. This is significant given that a recent George Mason University survey found only 12% of Americans know that more than 90% of climate scientists agree on human-caused global warming.

Consequently, it's worth reminding people of Tol's views on consensus, expressed in Tol (2014). I've added a shareable graphic to our resource of consensus graphics, featuring an excerpt from Tol (2014). Everyone is encouraged to retweet or republish the graphic, which is freely available under Creative Commons.

Other comments on Tol (2014)

There have been a number of articles published in response to Tol (2014) that offer some interesting perspectives on the various critical errors in Tol's paper.

Greg Laden at Science Blogs characterises Tol's paper concisely:

Tol is practicing a special kind of science denialism here, sometimes called “seeding doubt” or as I prefer it, “casting seeds of doubt on infertile ground.” In other contexts this is called “concern trolling” or the “You’re not helping” gambit.

Following this line of thought, Econnexus wonders about Tol's apparent concern that our consensus paper is polarizing:

What's the point of "further polariz[ing] the climate debate" by publicly picking holes in the methodology of a paper when you broadly agree with its conclusions and you yourself imply that you are concerned about said polarization, particularly when your critique has itself been criticised by a number of independent referees?

Small Epiphanies by Graham Wayne wonders how a paper that purports to be irrelevant to energy policy was accepted by a journal about energy policy:

“Energy Policy is an international peer-reviewed journal addressing the policy implications of energy supply and use from their economic, social, planning and environmental aspects…” insists the journal. Why would they publish a paper so clearly outside their expertise? More curious is that before Energy Policy agreed to publish it, ERL and several other journals rejected previous drafts. The reasons for the rejection were as copious as they were blunt.

And Then There's Physics slow claps Richard Tol's destructive comment:

The Consensus Project was, essentially, a citizen science project. I know some of those involved work at universities, but most were simply volunteers who helped to rate abstracts so as to establish what fraction of those that took a position with respect to AGW, endorsed AGW. The resulting paper was one of the papers of 2013 that received the most online attention. In any other field this would be seen as a remarkable success. A citizen science project publishes a paper that has more impact than almost any other paper published that year. Not in climate science, though. In climate science you have to attack and attempt to destroy anything that goes against the narrative that you’d like to control. Personally, I find it depressing that this is the manner in which some choose to conduct themselves and – in some sense – this simply seems like another illustration of how poor the general dialogue is in the climate science debate.

Critical Angle by Andy Skuce discusses how Andy's own private correspondence was stolen by an unknown hacker and how Richard Tol subsequently quoted his words out of context:

Putting aside, for now, the shabby ethics of citing stolen material, this interpretation of what I said is false. The quote was part of a discussion that a couple of us working on the project were having about our impressions that some of the abstracts in the sample were repeated. We were not sure if those impressions were real or whether they arose from having read hundreds of abstracts over several days and not remembering each one exactly. We had no way of checking the database for ourselves and we alerted John Cook so that he could investigate further. The notion that I was concerned about the effect of “fatigue” on the quality of my work is quite false and this is obvious to anyone who reads the quote in context.

I will be asking the editor of Energy Policy to correct this error and to remove any references to stolen private discussions in Professor Tol’s paper. It is surprising and disappointing that the reviewers and editorial staff of the journal did not spot this.

Real Sceptic examines Tol's suggestions of rater fatigue and how the actual expert Tol cites says the opposite of what Tol claims:

...this whole point about fatigue is nonsense. What Tol is referring to is survey fatigue, the tendency of people to quit or get less accurate when they fill in long surveys or a lot of surveys. But this was a team of raters who were free to rate abstracts at their leisure, they could start whenever they wanted, continue as fast or as slow as they wanted, and could take a break when they wanted. There wasn’t a deadline for submission or for finishing the ratings. This is a similar method as used by Oreskes 2004 which Tol refers to as one of the “excellent surveys of the relevant literature.”

You also expect raters to become more proficient in these type of situations. The author of the book that Tol cited to make his fatigue point confirmed that this is the case for this set up. If this wasn’t the case there wouldn’t be a 97% consensus from the abstract ratings and then also a 97% consensus from the authors rating their own papers.

Hot Whopper attempts to do a better job than us at explaining Tol's error in conjuring 300 non-existent rejection abstracts out of thin air:

The key point is that where there were differences between the raters and a third person had the final say, most papers that were changed from one category to another went from:

- explicit endorsement of AGW to implicit endorsement of AGW or

- implicit endorsement of AGW to no position on AGW or vice versa.

Contrary to what Richard postulated, there was not a huge shift from AGW endorsement or "no position" to rejection of AGW.

SkepticalScience has a couple of charts illustrating this in detail with numbers. Here's a simplified illustration as an animation to show what Richard's blunder resulted in.

In other words, where there was a shift in the category of an abstract, it just shifted slightly in the categorisation (mainly from explicit to implicit endorsement, or implicit to no position or vice versa) - and if anything the researchers tended to err on the conservative side if there was a marginal decision. There were hardly any abstracts that were reclassified from "no position" to rejection let alone from endorsement to rejection. Dana's diagram shows that out of 595 reclassified abstracts, only five papers would have gone from "no position" to "rejection of AGW" and only one paper from endorse to reject.

Rabett Run reports a paper by an Anonymous Scientist (why anonymous? Google "Tol Ackerman") that cuts to the heart of one of the critical flaws in Tol (2014):

...the assumption that the remaining differences are equally distributed among categories is more than problematic. It is WRONG and the data showing that it is WRONG have been made public.

I think we'll hear a lot more about this paper by the Anonymous Scientist. What the paper finds is that even if you reverse the changes we made during our reconciliation process, Tol's method still decreases the consensus. Tol has created the perfect climate denial machine: it reduces the consensus even if you reverse the data fed into it:

Most of the reduction in the consensus percentage is not due to the trend in shifts applied during reconciliation, but rather an artifact of the method itself.

The Global Change Institute at The University of Queensland issued a statement on our response to the Tol paper:

The University is committed to the publication of thoroughly researched, internationally peer-reviewed academic articles, without fear or favour resulting from that publication. As a result, the co-authors of the paper in Environmental Research Letters have examined the claims in the latest article published in Energy Policy and have identified 24 issues which can be viewed in an online response here.

These errors invalidate the criticisms of the Energy Policy article and lead to a strengthening of the original conclusions in the Cook et al. publication.

Lastly, I think the final word should go to Environmental Research Letters (ERL) whose expert reviewers rejected Tol's paper around a year ago. You can read the full list of 24 errors identified by the expert reviewers at ERL. Those errors remain in the paper accepted by Energy Policy. Here is a quote from the ERL review:

This section is not supported by the data presented and is also not professional and appropriate for a peer-reviewed publication. Furthermore, aspersions of secrecy and holding data back seem largely unjustified, as a quick google search reveals that much of the data is available online (https://skepticalscience.com/tcp.php?t=home), including interactive ways to replicate their research. This is far more open and transparent than the vast majority of scientific papers published. In fact, given how much of the paper’s findings were replicated and checked in the analyses here, I would say the author has no grounds to cast aspersions of data-hiding and secrecy.

Posted by John Cook on Friday, 6 June, 2014
