11 Characteristics of Pseudoscience

Posted on 10 August 2021 by Guest Author

This is a re-post from the Thinking is Power website maintained by Melanie Trecek-King where she regularly writes about many aspects of critical thinking in an effort to provide accessible and engaging critical thinking information to the general public.

Throughout most of our history, humans have sought to understand the world around us. Why do people get sick? What causes storms? How can we grow more food? Unfortunately, until relatively recently our progress was limited by our faulty perceptions and biases.

Modern science was a game changer for humanity. At its core, science is a way of learning about the natural world that demands evidence and logical reasoning. The process is designed to identify and minimize our biases. Scientists follow evidence wherever it leads, regardless of what they want (or don’t want) to be true. Scientific knowledge progresses by weeding out bad ideas and building on good ones. We owe much of the increase in the quality and quantity of our lives over the last century to scientific advancements.

It’s no wonder then that people trust science. The problem is, many don’t understand how science works and what makes it reliable, leaving them vulnerable to claims that seem scientific…but aren’t. By cloaking itself in the trappings of science, pseudoscience appeals to the part of us that recognizes science is a reliable way of knowing. But pseudoscience doesn’t adhere to science’s method. It’s masquerading. It’s cheating.

It’s easy to be misled by pseudoscience because we often want to believe. One of the defining features of pseudoscience is that it starts with the desired conclusion in mind and works backwards to find evidence to justify the belief. It would be incredible if the Loch Ness monster existed. Or if the planets and stars could predict our future. Or if we could talk to our dead loved ones. Pseudoscience also plays on many of our biases, making it easy for us to believe. We know our healthcare system has problems, so “natural” and “ancient” cures offer enticing alternatives. And when modern medicine doesn’t have the answers we need, it’s easy to fall for false hope.

But no matter how awe-inspiring the claims, or how much we wish them to be true, pseudoscience isn’t science. And putting our trust in pseudoscience can be harmful to our pocketbooks…and our health.

Instead, learn to spot the characteristics of pseudoscience and protect yourself from being misled or fooled.

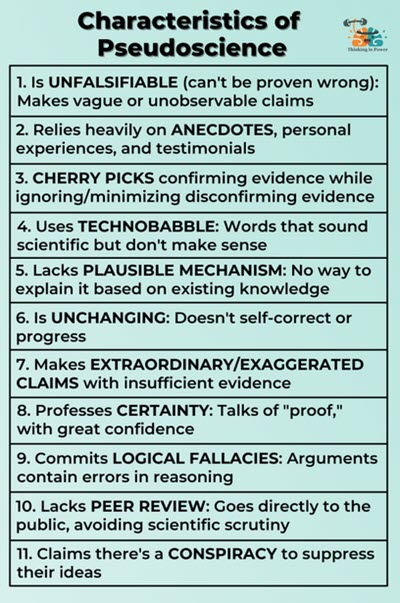

11 CHARACTERISTICS OF PSEUDOSCIENCE

1. Is unfalsifiable: It can’t be proven wrong.

The process of science involves proposing falsifiable claims and then trying to prove them wrong. If no testing or observation could ever disprove a claim, it’s not science. Basically, evidence matters – and unfalsifiable claims are invulnerable to evidence.

Pseudoscience often makes claims that aren’t falsifiable, such as those that are too vague to be tested and measured. For example, a detox foot pad claims it “promotes vibrant health and wellness,” and a supplement bottle says it “boosts the immune system.” Or a horoscope, which claims to use the science of astrology, says you should “avoid making any important decisions” and that “today is a good day to dream.”

Other forms of pseudoscience make supernatural claims that cannot be tested. By definition, the supernatural is above and beyond what is natural and observable and therefore isn’t falsifiable. For example, so-called “energy medicine,” such as reiki and acupuncture, claims that illnesses are caused by unmeasurable out-of-balance “energy fields” that flow along undetectable meridians…but that can be “adjusted” to restore health.

When pseudoscientific claims fail, ad hoc excuses often provide the last line of defense against falsifiability. Why was the psychic wrong? Blame the spirits. Or your memory. Why didn’t veganism and prayer cure your cancer? You ate that hamburger. And conspiracy theorists are masters at immunizing their beliefs against evidence: Evidence that doesn’t support the theory was “planted” and missing evidence was “covered up.”

2. Relies heavily on anecdotes: The evidence largely comes from personal experiences and testimonials.

Most of us think a personal experience is the best way to know if something is true. But anecdotes are terrible evidence. We don’t realize how easily we can misperceive our experiences. Plus, anecdotes aren’t controlled, so it’s difficult to know why something happened. Our biases and limited perceptions are precisely why we need carefully controlled scientific studies.

Yet the lifeblood of pseudoscience is the anecdote. Testimonials are easy and cheap. And by offering vivid and emotional stories, proponents can persuade without evidence. Who needs a body to prove Bigfoot exists when there’s a compelling story about a sighting?

Alternative treatments love to use testimonials, featuring customers who claim essential oils “treated” their headaches or magnetic bracelets “cured” their back pain. But for these claims to be scientific, researchers would need to control for other factors and compare the products to a placebo in a randomized, double-blinded controlled trial.

While these stories can be very convincing, there’s likely a reason your doctor doesn’t prescribe crystals or homeopathy. When pseudoscientific remedies are actually tested, they perform no better than placebos.

3. Cherry picks evidence: Uses favorable evidence while ignoring or minimizing disconfirming evidence.

It’s human nature to look for evidence that supports what you believe. And if you’re looking hard enough you will find it! One of the reasons science is so successful is that it’s designed to root out confirmation bias. Remember, scientists search for truth by trying to prove themselves wrong, not right.

The process of science is messy. Different types of studies provide different types and qualities of evidence. Findings that are replicated have stronger validity. When various lines of research converge on a conclusion, we can be more confident that the conclusion is trustworthy. This body of evidence is much more reliable than any individual study.

Pseudoscience is built on confirmation bias. Instead of looking at all of the evidence, it cherry picks individual studies, or even experts, to support the desired conclusion. And sometimes it’s very slim pickings, as the only studies to choose from are such low quality they failed to pass peer review in respectable scientific journals. They may have even been retracted.

A textbook example is Andrew Wakefield’s 1998 observational study of 12 children that claimed to have found a link between the MMR (measles, mumps, rubella) vaccine and autism. Not only have countless studies since then, involving millions of children, conclusively disproved the link, it was discovered that Wakefield forged his data as part of a scheme to profit off of a new vaccine. To make the case that the MMR vaccine causes autism, this retracted study is cherry picked and the rest of the body of evidence is ignored or minimized.

Each study is a piece of a puzzle. In science you have to look at the whole puzzle, not pick and choose the evidence that supports what you want to believe.
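To see why a handful of favorable studies can mislead, here is a minimal simulation sketch. It assumes a made-up treatment with no real effect, tested in 200 small studies; the function name `simulate_studies`, the study sizes, and the choice to keep the five most favorable results are all illustrative assumptions, not anything from real research.

```python
import random
import statistics


def simulate_studies(n_studies=200, n_per_group=20, seed=0):
    """Simulate studies of a treatment that truly does nothing.

    Each study compares a treated group with a control group drawn from
    the same distribution, so any measured "effect" is pure chance.
    All numbers here are hypothetical.
    """
    rng = random.Random(seed)
    effects = []
    for _ in range(n_studies):
        treated = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        control = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        effects.append(statistics.mean(treated) - statistics.mean(control))
    return effects


effects = simulate_studies()

# The whole puzzle: average effect across every study.
all_evidence = statistics.mean(effects)

# The cherry-picked view: only the five most favorable studies.
cherry_picked = statistics.mean(sorted(effects, reverse=True)[:5])

print(f"Average effect, all studies:     {all_evidence:.3f}")
print(f"Average effect, best 5 studies:  {cherry_picked:.3f}")
```

Taken together, the studies average out close to zero, which is the true effect in this sketch. The five hand-picked studies suggest a benefit that was never there.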

4. Uses technobabble: Words that sound scientific but are used incorrectly or don’t make sense.

To provide legitimacy to claims, pseudoscience mimics the language of science. All scientific fields have technical jargon that helps experts communicate complex concepts with accuracy and nuance to other experts. But most people don’t have the expertise to understand these terms and assume those using them know what they’re talking about. Jargon makes sense to experts. Technobabble doesn’t.

Pseudoscience is awash in technobabble. For example, a “Bio electric field enhancement technology foot and hand bath” (being sold online for $3,000) claims that “sine-wave filtered auditory stimulation is carefully designed to encourage maximal orbitofrontal dendritic development.” That may sound impressive, but it’s meaningless. (I couldn’t have made that up if I wanted to.)

5. Lacks a plausible mechanism: There’s no way to explain how the claim might work based on existing knowledge.

Today’s body of scientific knowledge is the product of decades, if not centuries, of progress. While it’s possible a breakthrough discovery could upend what we know about the laws and theories of modern science, it’s not likely. The novelty of pseudoscientific claims might be alluring, but if there were evidence available to support them they’d be science…not pseudoscience.

Many pseudoscientific claims lack a plausible way of explaining how they would work. For example, homeopathy is based on the premise that the more a substance is diluted, the stronger it is. Most homeopathic remedies are diluted to the point that there is literally none of the supposedly therapeutic substance left. Homeopaths explain this violation of the laws of chemistry and physics with the even more implausible idea that the water “remembers” the substance.
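The arithmetic behind “literally none left” can be sketched in a few lines. This example assumes the common homeopathic “C” scale, in which each step is a 1:100 dilution, and generously assumes the remedy starts with a full mole of the active substance. The function name `molecules_remaining` and the starting amount are illustrative assumptions.

```python
AVOGADRO = 6.02214076e23  # molecules in one mole


def molecules_remaining(starting_moles: float, c_dilutions: int) -> float:
    """Expected molecules of the original substance left after
    `c_dilutions` successive 1:100 ("C") dilutions.

    Assumes perfect mixing at every step; the starting amount is a
    hypothetical, generous figure.
    """
    return starting_moles * AVOGADRO * (100.0 ** -c_dilutions)


for c in (6, 12, 30):
    remaining = molecules_remaining(1.0, c)
    print(f"{c}C: about {remaining:.3g} molecules expected")
```

Under these assumptions, the expected count drops below a single molecule at around 12C. At 30C, a dilution often seen on product labels, the chance that even one molecule of the original substance remains is vanishingly small.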

6. Resists change: Doesn’t self-correct or progress.

The process of science is designed to weed out bad ideas and build on good ones. It discovers errors by actively searching for disconfirming evidence. Scientists change their mind with evidence, and that’s a good thing!

For centuries, doctors thought diseases were caused by imbalances of the four humors, or body liquids: blood, phlegm, black bile and yellow bile. The treatment? Bloodletting and vomiting. In the 19th century, Austrian physician Joseph Dietl found that pneumonia patients who received treatment had mortality rates three times higher than those who received no treatment. Scientific progress is why your doctor doesn’t cut you when you’re sick.

Conversely, pseudoscience resists change, often despite being refuted. Consider iridology, which claims to be able to diagnose all diseases by the pattern of colors in the iris. As the story goes, in the 1840s, Hungarian physician Ignatz von Peczely’s owl broke its leg. Peczely noticed the flecks of color in the bird’s eye before and after its leg healed, and from this observation iridology was born. Despite the claims having no plausible mechanism and being thoroughly disproven, the pseudoscience of iridology lives on.

7. Makes extraordinary/exaggerated claims: Promises extraordinary benefits with insufficient evidence.

Scientists are open to all claims, but extraordinary claims require extraordinary evidence.

Reality is complex, and understanding and addressing our problems is no exception. Sometimes modern science doesn’t have the answers we’re looking for. And in our desperate need for hope, pseudoscience offers easy and simple solutions.

For example, a fad diet says it helps dieters lose weight quickly, above what is biologically possible (or safe)…without dieting or exercise! And the Miracle Mineral Solution claims it can replace up to 80% of all pharmaceuticals, and can cure HIV, malaria, autism, and cancer (among others). Its secret? Industrial strength bleach.

If it sounds too good to be true, it just might be pseudoscience.

8. Professes certainty: Talks of “proof” and presents ideas with complete confidence.

One of the common misconceptions about science is that it proves ideas. All scientific conclusions are tentative. Something is “true” only as long as the evidence supports it and when the evidence changes so does our knowledge. The more evidence for an idea the more reliable it is, and ideas that are supported by many lines of evidence are unlikely to change. But science does not provide absolute proof or absolute certainty; instead, science is a process of reducing uncertainty.

To some, this uncertainty is uncomfortable. The fact that scientists are always “changing their minds” must mean they can’t be trusted and the tentative language scientists use to communicate must mean they don’t know what they’re talking about.

Pseudoscience, on the other hand, presents ideas simply and with great confidence, making it attractive to those seeking certainty. Whereas your doctor may tell you a test “suggests” a certain diagnosis, and “possible treatments may have certain benefits” but that “there’s a chance of some side effects,” a pseudoscientist would counter with something like “you have x” and “all you need to do” is use whatever product or service they’re selling.

If you want to get closer to understanding reality as it is, learn to embrace the uncertainty of knowledge.

9. Commits logical fallacies: Arguments contain errors in reasoning.

Logical fallacies are flaws in reasoning that weaken or invalidate an argument. Unfortunately, they can be quite persuasive, something that pseudoscience promoters know all too well.

While there are about a gazillion logical fallacies, the following are very common in pseudoscience.

Appeal to nature: Argues that something is good because it’s natural or bad because it’s unnatural.

A very common, but false, belief is that “natural” means safer. Nature is full of things that can harm or kill you, from typhoons to viruses to sharks, and human advancements, such as treated drinking water and antibiotics, have greatly improved our quality and quantity of life.

There’s also a misunderstanding of what “natural” even means. It doesn’t mean “chemical free”: literally everything is made of chemicals. And chemical isn’t a synonym for “toxin,” as anything is toxic at a high enough dose. Those are meaningless marketing words.

“Natural” also doesn’t mean better or more effective. A chemical’s source doesn’t determine its properties or its function, its structure does. For example, your body can’t tell the difference between a natural Vitamin C molecule and synthetic one.

The appeal to nature fallacy is ubiquitous in pseudoscience. Gwyneth Paltrow, who famously said, “I don’t think anything that is natural can be bad for you,” has built an entire company based on this fallacy. There are “all natural” and “organic” skin creams, clothes, and even candles (that smell like her vagina). Homeopathy “works naturally” with your body. Ayurveda aids with “natural healing.” And of course you should stay away from the “harmful chemicals” sold by “Big Pharma” and buy “all natural” supplements instead.

Appeal to tradition: Asserts that something is good or true because it’s old.

A common assumption is that something that’s been around for a long time must be effective. But historical reasons aren’t sufficient. If something truly works, provide the evidence.

Many pseudosciences commit this fallacy. For example, naturopathy evolved out of “ancient” healing practices. Astrology has been used for “thousands of years.” The paleolithic diet says you should “eat like your ancestors.”

Argument from ignorance: Asserts that something is true because we don’t know that it’s not.

In science, claims require evidence. Without evidence, there’s no reason to accept a claim…it simply means we don’t know.

Yet pseudoscientists often suggest that, because “science doesn’t know everything” or that “science can’t explain x,” their baseless claims must be true. This science-of-the-gaps fallacy, a version of the appeal to ignorance, implies that unanswered scientific questions justify their pseudoscience, despite the lack of evidence. But science’s lack of knowledge doesn’t mean something else must be true. It means we need to remain skeptical and demand sufficient evidence before accepting any claim.

Ad hominem: Attacks the source of the argument instead of the substance.

Personal attacks can be an effective way to discredit an argument. They appeal to our emotions and biases and can distract from the lack of a good argument.

The ad hominem can take many forms, from name-calling and insults, to attacking a person’s character, to questioning their motives, to calling them a hypocrite. For example, it’s common for health pseudoscience promoters to call medical doctors “shills” for industry or government, implying they can’t be trusted. Because unlike pseudoscience promoters, they’re obviously in it for the money!

A telling case is that of Paul Offit and Joseph Mercola. Mercola, an anti-vaccine alternative medicine promoter, often attacks Offit, one of the world’s foremost experts on vaccines, for being a “greedy shill.” Interestingly, Mercola’s online store, which is full of pseudoscience products, from supplements to vibration exercise equipment to organic bed linens, is worth over $100 million.

Pseudoscience promoters want us to believe that scientists are in it for the money but they’re in it for our benefit. Don’t fall for it.

The point is, scientific arguments must be logical. Illogical, fallacious arguments are a sign of pseudoscience.

10. Lacks adequate peer review: Avoids critical scrutiny by the scientific community.

Science is a community effort, of which peer review is an essential component. When a scientist completes a study, they write up their results and submit them to a journal for publication. The editors send the manuscript to other experts, who provide critical feedback, suggest edits and recommend whether or not the study is worthy of publication. Articles in scientific journals become part of scientific knowledge that other scientists build upon. While not perfect, peer review provides a crucial safeguard against error and fraud.

Pseudoscience bypasses peer review by presenting its claims directly to the public, via books, websites, social media, TV shows, etc. The media often uncritically repeat the claims, leaving the public unaware that they haven’t passed the necessary scrutiny by the scientific community. It doesn’t help that many pseudoscientists are very effective self-promoters…but charisma isn’t evidence.

Examples online abound. The website Natural News features headlines such as “Eggplant cures skin cancer” and “Has your DNA been altered by GMOs?”, while Vani Hari (AKA “Food Babe”) claims that the flu shot was used as a genocide tool and microwaved water produces a similar physical structure to water exposed to the words “Satan” and “Hitler.”

Since pseudoscience lacks the ability to pass peer review, some go the extra mile by making their own journals to mimic legitimate scientific ones. However, these journals are generally created for the sole purpose of confirming existing beliefs and are therefore not scientific. For example, the International Journal for Creation Research publishes papers that demonstrate the “young earth model, the global flood, the nonevolutionary origin of the species, and other evidences that correlate to the biblical accounts.”

Unfortunately, those without a science background may not be able to discern the difference between a pseudoscience journal and a genuine science journal.

11. Claims there’s a conspiracy to suppress their ideas: Criticism by the scientific community is a conspiracy.

Remember that the process of science is designed to challenge ideas, not confirm them. Scientists design experiments to disprove their explanations. They present their results in journals and at conferences so other scientists can try to disprove them, too. This criticism is harsh, but necessary. Only ideas that are able to withstand this critical scrutiny of the scientific community are accepted.

Pseudoscientists exist outside of the scientific community because they don’t adhere to science’s method and their claims can’t stand up to scrutiny. But instead of trying to make their case with evidence they claim scientists are involved in a conspiracy to “suppress the truth.” Why else would nearly all experts reject their claims?

Many pseudoscientists present themselves as the lone hero scientist standing up to the evil establishment and fighting for the victims who have been prevented from hearing “the truth.” It’s the ultimate “get out of jail free” card for when the evidence doesn’t support your beliefs.

For example, Kevin Trudeau’s book “Natural cures ‘they’ don’t want you to know about” claims that there are “all natural” cures for nearly every illness, including cancer, that are being “hidden” and “suppressed.” The anti-vaccination documentary “Vaxxed: From cover-up to catastrophe” says Andrew Wakefield was right all along about the connection between the MMR vaccine and autism, but “they” (read: the entire scientific community and all related regulatory bodies) are involved in a nefarious plot to cover it up.

This assertion rests on a fundamental misunderstanding of science’s incentive structure: the best way to make a name for yourself as a scientist is to discover something previously unknown or to disprove a longstanding conclusion. A conspiracy by “Big Pharma” to cover up a “natural cure for cancer,” as many pseudoscientists claim, would require the cooperation of scientists in nearly every academic institution, industry, and government agency…in every country on earth. That’s an awfully juicy secret to keep, especially considering how many scientists have lost loved ones to cancer (or been diagnosed themselves). What would be the motive? Money? “Big Pharma” would certainly make money from curing cancer. Plus, this conspiracy would have to be global, and yet nearly every other country has socialized medicine. How would “Big Pharma” convince the governments of Germany and Sweden, for example, to spend more money while allowing their citizens to suffer and die?

Like most grand conspiracies, this one starts to unravel as soon as you start to pull on the thread.

THE BOTTOM LINE

Science is one of the most reliable ways of knowing. While there’s no one scientific method, science is essentially a community of experts using diverse methods to gather evidence and scrutinize claims. It self-corrects and progresses. It assumes it’s incomplete (or wrong!) so it never stops questioning and testing. The results speak for themselves.

The public rightly trusts science. Pseudoscience is pretending, convincing unsuspecting victims to trust it by free-riding on science’s coattails. But pseudoscience isn’t harmless: It can literally cost you your life.

Critical thinking and science literacy are empowering. They can help you make better decisions and live a better life. So learn to spot the characteristics of pseudoscience to avoid being fooled by science’s pretender.

To Learn More

Schmaltz, R., and Lilienfeld, SO. (2014). Hauntings, homeopathy, and the Hopkinsville Goblins: using pseudoscience to teach scientific thinking. Front. Psychol. 5:336. doi: 10.3389/fpsyg.2014.00336

Finn P, Bothe AK, Bramlett RE. (2005). Science and pseudoscience in communication disorders: criteria and applications. Am J Speech Lang Pathol. 14(3):172-86. doi: 10.1044/1058-0360(2005/018). PMID: 16229669.

Fasce, A., & Ernst, E. (2018). Dismantling the rhetoric of alternative medicine. Smokescreens, errors, conspiracies, and follies. Mètode Science Studies Journal, 8. DOI: 10.7203/metode.8.10004

Special thanks to Jonathan Stea and Lynnie Bruce for their feedback

A good list of things to look for.

I note that more than once the phrases "good ideas" and "bad ideas" are compared. Of course, scientists know that this does not mean some sort of moral "good vs. evil" statement.

In science, "good ideas" are ones that allow us to make more accurate predictions of how things behave. "Bad ideas" are ones that fail to provide any improvement in our understanding or ability to anticipate what will happen. That's where testing comes in, and then revising our thinking when observations show that the "idea" wasn't helping.

Occam's razor argues that "entities should not be multiplied beyond necessity". Technobabble falls into the category of using sciency terms "beyond necessity" - making things sound impressive when really the ideas are not.

Is the technobabble designed to baffle you, or provide an accurate description? Properly-used technical terms help a speaker or writer be very specific about what they are saying - as long as the listener or reader knows what the terms mean. As part of a dialog, a speaker should be willing to explain unknown terms if they are speaking scientifically. If they don't - or can't - then it is more likely to be pseudo-science. I can't count the number of times in my life when someone has done the "oh, it's too hard to explain" to cover up the fact that they just don't know.

Thanks for the comment! I will admit I'm partial to technobabble, simply because it can be rather humorous. But to your point, I've noticed my students easily confuse technobabble with real scientific terminology that they don't understand. By definition technobabble is used to confuse people into thinking it says something profound when it's essentially meaningless. I like your solution, though, as it puts the burden back on the "babbler" to explain what it means.