Answering questions about consensus in a MOOC webinar

Posted on 25 January 2014 by John Cook

I was honoured to be invited as a guest lecturer for Climate Change in Four Dimensions, a Massive Open Online Course (MOOC) hosted at Coursera. This is a free, online course run by the University of California, San Diego, featuring two of my personal heroes: Richard Somerville and Naomi Oreskes. Week 2 featured some must-watch lectures by Naomi Oreskes on the nature of scientific knowledge. The required activity for that week involved reading the peer-reviewed paper authored by the Skeptical Science team, Quantifying the Consensus on Anthropogenic Global Warming in the Scientific Literature.

The webinar had students shooting questions at me about our consensus paper. The time seemed to pass all too quickly (sign of a good time) and a number of questions went unanswered. So I thought I would use this blog post to go through the webinar transcript and address the unanswered questions (the advantage of blogging is I can also bling up my answers with gratuitous infographics):

You said the authors "err[ed] on the side of least drama." Is this still a good idea?

To provide some background to this question, erring on the side of least drama (ESLD) suggests that rather than lean towards alarmism, scientists tend to be conservative and downplay their science. We discuss the evidence for this when looking at how the IPCC tend to underestimate climate impacts.

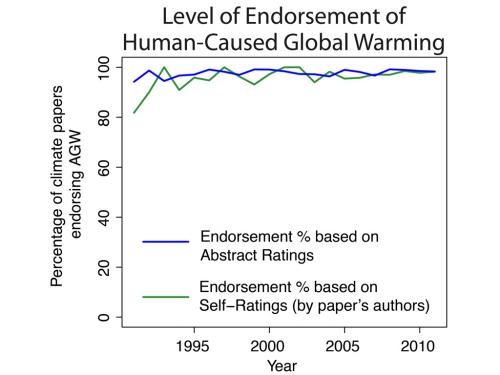

When deciding on the criteria for categorising climate papers, we took somewhat of a conservative approach in that if there was any doubt whether a paper was stating a position on anthropogenic global warming (AGW), we rated it as stating No Position on AGW. Did this significantly affect our results? Our method found that by rating abstracts, 97.1% of abstracts stating a position on AGW endorsed the consensus. When we asked scientists to rate their own papers, we found 97.2% of papers self-rated as stating a position on AGW endorsed the consensus.

Comparing the two independent results (97.1% versus 97.2%) is a powerful validation of our abstract rating result. Funnily enough, we've observed that critics of our paper tend to avoid the fact that 1,200 scientists rating their own papers obtained the same result as the Skeptical Science team. So while there may have been a slight tendency towards conservatism in our rating, I don't think it affected the results significantly.

Michael Crichton in his appendix asserted that scientific consensus on human eugenics proves that consensus is unreliable. Has this criticism acquired traction in Australia?

While I'm not an expert on the history of eugenics, my understanding of it is the "consensus" was a relatively widespread adoption of policies that applied the science of eugenics in social settings. The decision to use eugenics on humans was predominantly a question of values and ethics rather than of scientific evidence. Comparing this situation to the scientific consensus on AGW is comparing apples to oranges.

More generally, however, the question of whether consensus is a reliable indication of scientific truth is a good one. I recommend watching Naomi Oreskes' video lectures from Week 2 of this MOOC as she discusses this very question - how can we know the consensus is right? Confidence that you're standing on solid scientific ground increases when the consensus among the scientific community is built on a consilience of evidence. Scientists tend to be an argumentative bunch - they didn't earn the term 'herd of cats' for no reason. So when they do show overwhelming agreement, that's a strong indication that the evidence is all pointing in one direction. This is the case with human-caused global warming, with many human fingerprints on climate change.

I don't recall ever hearing the Crichton/eugenics argument used here in Australia.

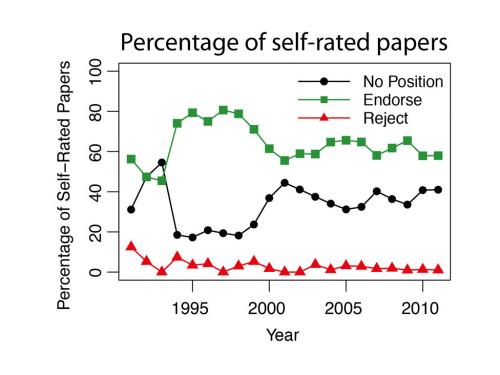

Regarding Figure 2(b), do you have any idea what happened between 1995 and 2000?

Here is Figure 2(b) from our paper. It shows the percentage of self-rated papers that endorse anthropogenic global warming (AGW), reject AGW or state no position.

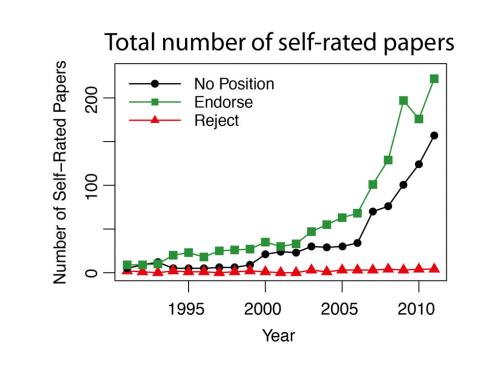

Notice the large variability prior to 1995, when the percentage of endorsement papers jumps up dramatically while the number of no position papers rises then falls. The clue to the dramatic variability in the early 1990s can be found in Figure 2(a). This is the "raw data" that Figure 2(b) derives from - the total number of papers that received self-ratings by the papers' authors.

Observe that there are very few self-rated papers in the early 1990s. Consequently, when you calculate percentages among these low quantities, you will see high variability in the percentages. The dramatic changes in percentages in the early 1990s are an artifact of small sampling.
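To make the small-sample point concrete, here is a minimal Python sketch. The counts are hypothetical, chosen only for illustration and not taken from our data, and `endorsement_share` is just a helper name made up for this example. It shows how a single extra endorsement paper moves the percentage in a year with very few self-rated papers compared with a year with many.

```python
def endorsement_share(endorse: int, total: int) -> float:
    """Percentage of a year's self-rated papers that endorse AGW."""
    return 100.0 * endorse / total


# Hypothetical counts (NOT from the paper): a sparse year and a busy year,
# each starting at the same endorsement share.
small_before = endorsement_share(3, 5)      # sparse year: 3 of 5 papers
small_after = endorsement_share(4, 5)       # one more endorsement paper
large_before = endorsement_share(120, 200)  # busy year: 120 of 200 papers
large_after = endorsement_share(121, 200)   # one more endorsement paper

# How far one reclassified paper shifts the percentage in each case.
small_swing = small_after - small_before
large_swing = large_after - large_before

print(f"Sparse year swing: {small_swing:.1f} percentage points")
print(f"Busy year swing:   {large_swing:.1f} percentage points")
```

With only a handful of papers in a year, one paper is a large slice of the total, so the percentage lurches around. With hundreds of papers, the same single paper barely registers.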

The population studied was people who have published papers in a journal concerning climate change. That seems to be a biased sample.

Well, yes, that's the whole point. We weren't measuring general public opinion about climate change. We wanted to obtain the level of agreement among scientists with expertise in climate change. Scientists who had published peer-reviewed papers on the subject of 'global climate change' or 'global warming' were assumed to possess some degree of expertise on the subject.

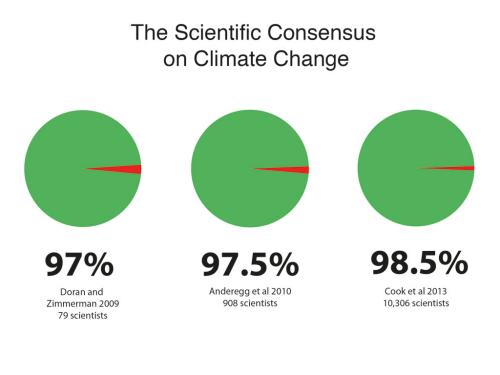

Of course, I should make the clarifying point that the "population" was peer-reviewed climate papers. We were predominantly interested in the state of published research, rather than polling scientists. This puts our analysis in the same vein as Naomi Oreskes' 2004 analysis of peer-reviewed papers, rather than surveys such as Doran and Zimmerman (2009).

Nevertheless, by determining which scientists authored endorsement vs rejection papers, we were able to also conduct an analysis similar to Anderegg et al (2010), which collated a database of scientists who had made public statements on climate change. We found that among scientists who had authored a paper stating a position on AGW, 98.4% of the scientists endorsed the consensus.

Why is this figure greater than 97.1% of climate papers? Because endorsement papers tended to have more co-authors than rejection papers.
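The arithmetic behind that is easy to sketch in Python. The numbers below are hypothetical and deliberately tiny, not our actual counts, and the sketch assumes each scientist appears on only one paper so that nobody is counted twice. It only illustrates the direction of the effect: if endorsement papers carry more authors each, the share of scientists endorsing ends up higher than the share of papers endorsing.

```python
def share(endorse: int, reject: int) -> float:
    """Endorsement percentage among items that state a position."""
    return 100.0 * endorse / (endorse + reject)


# Hypothetical counts (NOT from the paper).
endorse_papers = 9
reject_papers = 1
authors_per_endorse_paper = 3  # assumed: endorsement papers have more co-authors
authors_per_reject_paper = 1   # assumed: rejection papers have fewer

# Share counted by paper.
paper_share = share(endorse_papers, reject_papers)

# Share counted by scientist, assuming no author appears on two papers.
endorse_authors = endorse_papers * authors_per_endorse_paper
reject_authors = reject_papers * authors_per_reject_paper
author_share = share(endorse_authors, reject_authors)

print(f"By paper:     {paper_share:.1f}%")
print(f"By scientist: {author_share:.1f}%")
```

Counting by scientist weights each paper by its number of authors, so the category with more co-authors per paper gains ground.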

Do you categorize the people giving consensus on the basis of qualification and experience?

No, we didn't look at qualification or experience (other than to note the number of papers per scientist). But that is definitely an area worth further exploration.

Very interesting in your analysis is though that close to double as many in the self-rating process endorsed the consensus view compared to those having made the abstract rating process, whereas the opposite is the case regarding those papers being considered either to have no AGW (Anthropogenic Global Warming) opinion or being undecided compared to those doing the self-rating process. How will you comment on that?

The abstract rating only used the short text in the abstract (well, mostly short, some abstracts were excruciatingly long-winded) to determine whether a paper endorsed AGW. This obviously had its limitations - it didn't tell us whether the paper might have gone on to endorse AGW in the full paper (e.g., in the Introduction section). Our expectation was that many abstracts that were rated No Position on AGW would go on to endorse AGW in the full paper.

This was part of the motivation for surveying the scientists. We wanted to compare our abstract ratings to the ratings obtained from reading the full papers. When scientists rated their own papers, they would obviously be fully aware of everything they said in their own paper. So we considered the self-ratings a proxy for the endorsement level of the full paper. And asking the scientists to provide the self-ratings was a much more attractive option than reading thousands of full papers!

Our expectation that self-ratings would show more endorsements than abstract ratings was confirmed by the survey data. Amongst self-rated papers, most of the No Position abstracts were rated as endorsing AGW. Interestingly, one of the criticisms of our research from those that dismiss the scientific consensus is to claim two thirds of climate abstracts state no position on global warming. What these critics fail to mention is that most of those two thirds of climate abstracts go on to endorse the consensus in the full paper.

How much impact on public belief in the scientific consensus is the tendency of the media to cover with equal weight/seriousness both climate science with lots of data and research behind it and climate speculation with almost no science?

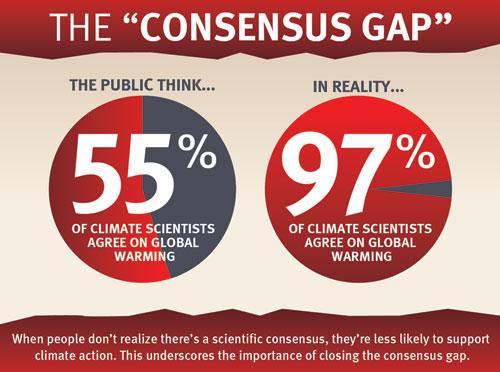

The way mainstream media covers climate change has had a significant impact on public belief. When the public are asked "how many climate scientists agree that humans are causing global warming?", the average answer is around 55%. The discrepancy between public perception and the actual 97% consensus is called the "Consensus Gap".

When mainstream media outlets cover the climate issue by pitting a scientist against a dissenter, they reinforce this misconception of a 50:50 debate. One study found that when the public view a media story on climate change covered in this fashion (scientist vs dissenter), the result is a lowered perception of scientific consensus and lowered support for climate action.

Is there an anti-intellectual component to public doubts about expert consensus?

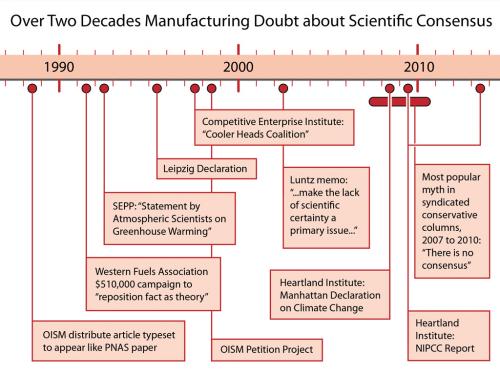

While the media's "balance as bias" tendency has contributed to the consensus gap, I think the greatest contributor has been a persistent misinformation campaign over two decades casting doubt on the scientific consensus.

There are two predominant motivations behind this misinformation campaign. One is vested interest, demonstrated in campaigns such as Western Fuels Association's campaign to reposition global warming fact as theory. The other is ideology, with right-wing ideological think-tanks featuring prominently in the misinformation campaign. Conservative ideology abhors the actions required to solve the global warming problem. Consequently, they deny that there is any problem in the first place. This drives the anti-science movement that rejects the scientific consensus on human-caused global warming.

Note: all the graphics from this post come from our resource of climate graphics which are freely available for republishing.

The question about the authors surveying only climate science journals kills me. Should fossil fuel trade magazines have been included? How about "Crackpot Quarterly"?

When it comes to this question of whose opinion about climate change is worth considering, one might reflect upon the implications of the recent survey that discovered, among those who self-identify as Republican, almost twenty percent more believe in demonic possession than believe in anthropogenic global warming. Millions believe that Hell exists, that Satan lives there, that He periodically sends evil demons to earth to displace our souls from our bodies, THAT they believe. But the idea that dumping billions of tons of heat-retaining gas into the atmosphere will eventually warm that atmosphere? I mean come on, that's really far fetched . . .

Actually, kanspaugh, the survey did include some articles from trade journals, such as the Oil and Gas Journal as well as from non-climate science journals such as the Bulletin of the American Association of Petroleum Geologists. The criteria for inclusion in the survey were that the article be peer-reviewed and that the papers appeared using our search terms.