Changing Climates, Changing Minds: The Personal

Posted on 11 March 2012 by Andy Skuce

Nobody comes into this world with a fully-formed opinion on anthropogenic climate change. As we learn about it, we change our minds. Sometimes, changing your mind can be easy and quick; sometimes it’s hard and slow. This is an anecdotal and subjective account of the author’s changes of mind.

A goal of Skeptical Science is to change people’s minds, especially the minds of people who doubt the reality of man-made climate change. John Cook and Stephan Lewandowsky’s The Debunking Handbook provides a how-to and how-not-to resource for debunking myths and misinformation: a guide to changing people’s minds, based on published research in psychology.

In this article I’ll examine my own history and sketch out how I twice changed my mind on climate change; I’ll speculate on why one step was easy yet memorable while the other was hard work but forgettable.

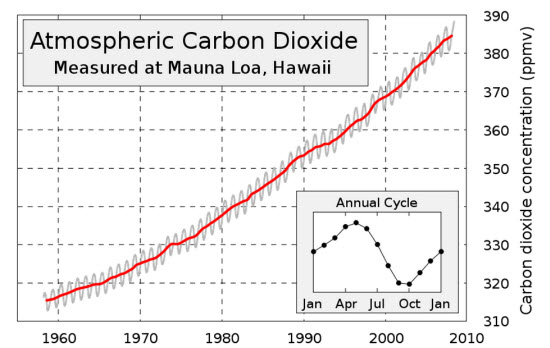

The Keeling Curve epiphany

You're only as young as the last time you changed your mind.

-Timothy Leary (via Stewart Brand)

My university education was in geology and geophysics. Earth Science students tend to have some fundamental concepts drummed into their skulls, none of them instinctive or common sense:

- Deep Time; the scarcely imaginable age of the Earth. (This and other geological ideas are beautifully described in John McPhee’s book Basin and Range.)

- Gradualism; even the largest features of the Earth, oceans and mountain chains, are the product of slow processes over long time frames. This contrasts with catastrophism, the idea that the Earth’s features formed by sudden and unusual paroxysms.

- Uniformitarianism; the processes that worked to shape the Earth are the same as those operating today. Often expressed in the phrase: The present is the key to the past.

Notions like these comprise a heuristic—a problem-solving framework—used to sort the most likely hypotheses from the least likely. Geologists are taught to be favourably biased towards explanations that are slow-acting and that involve commonplace mechanisms: to show a preference for the humdrum over the extraordinary.

I first started hearing about man-made climate change in the 1990s, following the Rio Earth Summit. From my geologist’s perspective, it seemed immediately implausible that any significant global change could occur suddenly or be caused by just a couple of centuries of human activity. Nevertheless, I was curious enough to pick up a copy of a book by John Houghton: Global Warming: The Complete Briefing (second edition, 1997). At the time, Houghton was co-chair of the IPCC’s scientific assessment working group.

I soon stumbled upon the Keeling Curve. I was initially puzzled by the annual saw-tooth pattern, which turned out to be nicely explained by the seasonal growth cycles of plants. I noted the gradual upward climb in CO2 concentrations. Suspecting that this near-linear trend had been graphically amplified, I looked at the Y-axis and was surprised to see that from the 1960s to the 1990s the absolute atmospheric concentration of CO2 had increased by nearly 15%. Fifteen percent in thirty years! To a geologist, thirty years is not even an eye blink and a fifteen percent change in any important global parameter in such a short time is unprecedented.

Reading further, it became clear to me that the trend of CO2 increase was a result of our burning of fossil fuels and that, once it is understood that CO2 is a critical component of the greenhouse effect, continuing such rapid emissions would inevitably lead to changes in the climate.

Even though I now accepted the scientific reality of man-made climate change, coming to grips with the potential severity of climate change took much longer.

Overcoming lukewarm bias

When the facts change, I change my mind. What do you do, sir?

Reputedly said by the economist John Maynard Keynes, after having been criticized for changing his position on monetary policy during the Great Depression.

A friend recently reminded me of a long comment I had made in a private online forum in 2006, during a discussion of a scientific association’s planned public stance on climate change. Many of the people there were, I claimed, making biased and anti-scientific arguments against the basic chemistry and physics of increasing CO2 concentrations and climate change. But I also wrote this:

…I consider myself something of a climate change skeptic. There are valid scientific and economic reasons for advocating a do-nothing stance on climate change.

Thus, by accepting the basic science but downplaying the consequences of climate change, I was adopting the position of a lukewarmer. That’s no longer my opinion and, until I reread that passage, I had forgotten I had ever held it.

It may be sufficient explanation for many readers to know that I was employed full-time for many years in oil exploration (I’m now mostly retired). While there’s an undeniable element of truth to that explanation, I think that the biggest factor that led me to minimize the effects of climate change was an inherent optimism bias, a tendency to discount threats and instead always look on the bright side. Having such a bias is generally a benefit in society and at work. When somebody makes a dire prediction, I reflexively think: “Don’t worry, things won’t be that bad” and immediately start looking for signs of pessimistic bias in them.

An example would be my reaction to watching the movie An Inconvenient Truth. It struck me that Al Gore was misleading in his portrayal of the amount of sea level rise to be expected over the twenty-first century, even if every word he spoke was true. Indeed, the people who went with me to the movie all came away thinking that a six-metre sea-level rise was imminent and they reacted in disbelief when I told them what the IPCC forecasts actually were. For me, at that time, the main lesson of the movie was that people sounding the alarm on climate change exaggerate. It took an effort for me to appreciate later that the IPCC had a conservative bias in its projections and that multi-metre sea level rises were within the range of scientific projections over the coming centuries (e.g., see fig 17, here).

After 2006, I took part in an internet discussion forum, initially adopting the role of agnostic on serious anthropogenic climate change, arguing with both extremes; let’s call them “alarmists” and “deniers”, for want of better labels. This experience was what eventually changed my mind on the seriousness of climate change and on the idea that climate scientists had an alarmist bias. Every time I examined a denialist argument, a little research quickly convinced me that it was wrong; invariably the references were unreliable and the reasoning incoherent. When it came to disagreeing with the alarmists, even if the worst outcomes they predicted were questionable and sometimes overstated, their overall case was coherent and based on solid references. Over a period of a few years, I drifted away from my lukewarmer stance. I can thank a handful of deniers for provoking me to do my homework, which helped me change my mind; but I don’t think I had any success in changing their minds.

The divided self: What was I thinking?

It is easy to see the faults of others, but difficult to see one’s own faults. One shows the faults of others like chaff winnowed in the wind, but one conceals one’s own faults as a cunning gambler conceals his dice. -Prince Gautama Siddhartha (via Jonathan Haidt)

Why was it so easy, even a bit of a thrill, to overcome my geologist’s biases and to accept the basic physics of man-made climate change, when it was so difficult to overcome the optimistic bias that influenced me to believe that any change in climate wouldn’t be that bad? I’m not sure there’s an easy answer to that or that I'm the person best placed to provide it. But after reading some popular books on psychology, I would speculate that those two sets of biases were located in different parts of my brain: the geologist’s bias is a taught bias, lodged in the logical and conscious part of the brain and easily confronted, while the optimistic bias is innate and rooted in the subconscious.

Who’s in charge here? Source.

For those interested, all three of the following books are recommended.

- Nobel Prize winner Daniel Kahneman’s book Thinking, Fast and Slow explores the division of the brain into “System 1”, the fast-thinking intuitive part that does most of the work in running our lives, and “System 2”, the slow-thinking and energy-intensive part of our brain that we have to engage to solve difficult problems. System 1 works wonderfully well most of the time but its inbuilt pattern recognition mechanisms can lead us astray, for example, with optical illusions or probability problems. System 2 is clever but lazy, usually engaging after System 1 has done its work. It sometimes makes up stories to explain away System 1’s shortcomings, rather than attempting to correct them.

- Evolutionary biologist Robert Trivers’ book The Folly of Fools: The Logic of Deceit and Self-Deception in Human Life takes a radical view, arguing that deceit is everywhere in nature, and that it is an adaptive strategy employed by genes and by individual plants and animals; it is expressed by orchids, angler fish and Bernard Madoff. We not only deceive our predators and prey, but also our spouses and relatives, and, especially, ourselves. The truth may set you free but deceit can get you ahead, and the better you can fool yourself, the better you are able to fool others.

- Psychologist Jonathan Haidt’s The Happiness Hypothesis has, among other things, a powerful metaphor for the divided human mind:

Modern theories about rational choice and information processing don’t adequately explain weakness of the will. The older metaphors about controlling animals work beautifully. The image that I came up with for myself, as I marveled at my weakness, was that I was a rider on the back of an elephant. I’m holding the reins in my hands, and by pulling one way or the other I can tell the elephant to turn, to stop, or to go. I can direct things, but only when the elephant doesn’t have desires of his own. When the elephant really wants to do something, I’m no match for him. Source

We shouldn’t be too surprised when we get things badly wrong and show bias. It’s in our nature. And if a climate skeptic doesn’t immediately accept a compelling argument or even a simple fact, it’s not necessarily because they are wilfully obstinate or dishonest. It is probably true that some diehard denialists have internal pachyderms with skins so thick that even sustained whacking from the mahout won’t ever get through. However, many skeptics should come around eventually—not because the advocates of the urgency of action on climate change are smarter or more persuasive or more virtuous than the doubters—but because the scientific consensus on man-made climate change is right. The scientific method, including the peer-review process, produces reliable knowledge precisely because it has been developed to overcome our natural human biases.

In the upcoming article Changing Climates, Changing Minds: The Great Stink of London, I look at how Victorian London came to grips with its human waste problem and compare that with the challenge we face in altering the political direction on climate change.

Arguments

- AGW

- PO

- GFC MkII (MkIII etc)

- Overpopulation

Population growth underpins all the others, leading me to observe that there are just too many of us, even if (fond hope) we eventually get our emissions under control and develop an alternative to fossil energy. Perhaps nature will evolve a new pestilence to decimate us, perhaps starvation will decimate us under the impending food and energy supply insecurities, or perhaps we will decimate ourselves through war. All I am sure of is that our population will ultimately collapse and it will not be pleasant for the survivors. Alarmist or Realist? I think it is realistic to be somewhat alarmed about the path we are on. Perpetual growth is an economic nonsense. We would be wise to be planning now for a shrinking population and economic contraction, but which politician would want to be part of that picture?