What do we learn from James Hansen's 1988 prediction?

What the science says...

Hansen's 1988 projections were too high mainly because the climate sensitivity in his climate model was high. But his results are evidence that the actual climate sensitivity is about 3°C for a doubling of atmospheric CO2.

Climate Myth...

Hansen's 1988 prediction was wrong

'On June 23, 1988, NASA scientist James Hansen testified before the House of Representatives that there was a strong "cause and effect relationship" between observed temperatures and human emissions into the atmosphere. At that time, Hansen also produced a model of the future behavior of the globe’s temperature, which he had turned into a video movie that was heavily shopped in Congress. That model predicted that global temperature between 1988 and 1997 would rise by 0.45°C (Figure 1). Ground-based temperatures from the IPCC show a rise of 0.11°C, or more than four times less than Hansen predicted. The forecast made in 1988 was an astounding failure, and IPCC’s 1990 statement about the realistic nature of these projections was simply wrong.' (Pat Michaels)

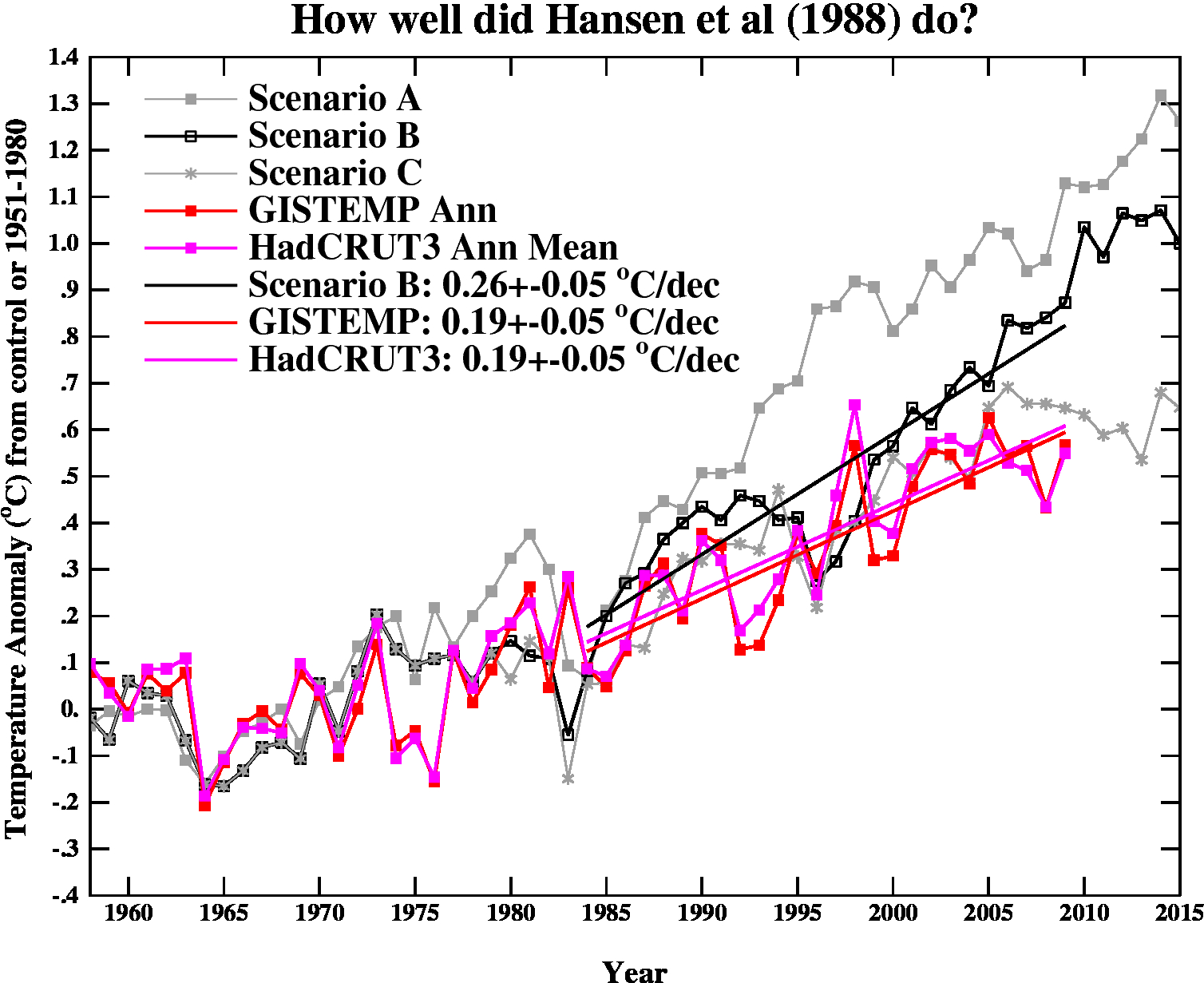

In 1988, James Hansen projected future temperature trends using 3 different emissions scenarios identified as A, B, and C. Scenario A assumed continued exponential greenhouse gas growth. Scenario B assumed a reduced linear rate of growth, and Scenario C assumed a rapid decline in greenhouse gas emissions around the year 2000 (Hansen 1988). As shown in Figure 1, the actual increase in global surface temperatures has been less than any of Hansen's projected scenarios.

Figure 1: Global surface temperature computed for scenarios A, B, and C, compared with two analyses of observational data (Schmidt 2009)

As climate scientist John Christy noted, "this demonstrates that the old NASA [global climate model] was considerably more sensitive to GHGs than is the real atmosphere." Unfortunately, Dr. Christy decided not to investigate why the NASA climate model was too sensitive, or what that tells us. In fact there are two contributing factors.

Radiative Forcing

A radiative forcing is basically an energy imbalance which causes changes at the Earth's surface and atmosphere, resulting in a global temperature change until a new equilibrium is reached. Hansen translated the projected changes in greenhouse gases and other factors in his three scenarios into radiative forcings, and in turn into surface air temperature changes. Scenario B projected the actual changes we've seen in these forcings most closely. As discussed by Gavin Schmidt and shown in the Advanced version of this rebuttal, the radiative forcing in Scenario B was too high by about 5-10%. Thus in order to assess the accuracy of Hansen's projections, we need to adjust the radiative forcing and surface temperature change accordingly.

Climate Sensitivity

Climate sensitivity describes how sensitive the global climate is to a change in the amount of energy reaching the Earth's surface and lower atmosphere (radiative forcings). Hansen's climate model had a global mean surface air equilibrium sensitivity of 4.2°C warming for a doubling of atmospheric CO2 [2xCO2]. This is on the high end of the likely range of climate sensitivity values, listed as 2-4.5°C for 2xCO2 by the IPCC, with the most likely value currently widely accepted as 3°C.

The Scenario B forcing was about 5-10% too high, and its projected global surface air temperature trend was 0.26°C per decade, while the actual surface air temperature trend has been about 0.2°C per decade (NASA GISS). Together these imply that the sensitivity of Hansen's climate model was about 25% too high. The real-world climate sensitivity would have to be approximately 3.4°C for 2xCO2 for Hansen's climate model to have correctly projected the ensuing global surface air warming trend. This climate sensitivity value is well within the IPCC range.

In other words, Hansen's global temperature projections were too high primarily because his climate model had a high climate sensitivity parameter. Had the sensitivity been approximately 3.4°C for 2xCO2, and had Hansen decreased the radiative forcing in Scenario B slightly to better reflect reality, he would have correctly projected the ensuing global surface air temperature increase.
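The arithmetic behind the roughly 3.4°C figure can be sketched in a few lines of Python. This is only an illustration: it assumes that the warming trend scales linearly with both the forcing and the climate sensitivity, and the function and constant names are made up for this example rather than taken from Hansen's model.

```python
# Figures quoted in the text above.
MODEL_SENSITIVITY = 4.2   # °C per CO2 doubling in Hansen's 1988 model
PROJECTED_TREND = 0.26    # °C per decade, Scenario B
OBSERVED_TREND = 0.20     # °C per decade, NASA GISS


def implied_sensitivity(forcing_overestimate: float) -> float:
    """Sensitivity that would have matched the observed trend.

    Assumes (for illustration only) that the warming trend scales
    linearly with both the forcing and the climate sensitivity.
    """
    # Trend Scenario B would have shown with the corrected forcing.
    adjusted_trend = PROJECTED_TREND / (1.0 + forcing_overestimate)
    return MODEL_SENSITIVITY * OBSERVED_TREND / adjusted_trend


# The text gives the forcing overestimate as a 5-10% range.
for overestimate in (0.05, 0.10):
    print(f"forcing {overestimate:.0%} too high -> "
          f"{implied_sensitivity(overestimate):.2f} °C per doubling")
```

Under this simple scaling, a 5% forcing overestimate gives a value close to the 3.4°C quoted above, and a 10% overestimate gives a somewhat higher value that is still inside the IPCC range.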

Spatial Distribution of Warming

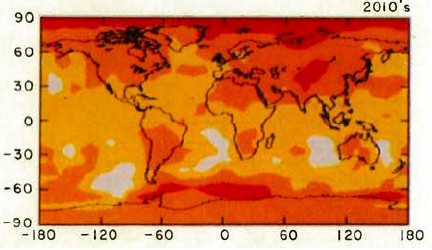

Hansen's study also produced a map of the projected spatial distribution of the surface air temperature change in Scenario B for the 1980s, 1990s, and 2010s. Although the decade of the 2010s has just begun, we can compare recent global temperature maps to Hansen's maps to evaluate their accuracy.

The actual amount of warming (Figure 3) has been less than projected in Scenario B (Figure 2) for two reasons: the decade of the 2010s, which will almost certainly be warmer than the 2000s, has only just begun, and, as discussed above, Hansen's climate model projected a higher rate of warming because of its high climate sensitivity. However, as you can see, Hansen's model correctly projected amplified warming in the Arctic, as well as hot spots in northern and southern Africa, west Antarctica, more pronounced warming over the land masses of the northern hemisphere, etc. The spatial distribution of the warming is very close to his projections.

Figure 2: Scenario B decadal mean surface air temperature change map (Hansen 1988)

Figure 3: Global surface temperature anomaly in 2005-2009 as compared to 1951-1980 (NASA GISS)

Hansen's Accuracy

The appropriate conclusion to draw from these results is not simply that the projections were wrong. The correct conclusion is that Hansen's study is another piece of evidence that climate sensitivity is in the IPCC stated range of 2-4.5°C for 2xCO2.

Last updated on 25 November 2016 by dana1981.

A minor note, inspired by re-reading Myhre et al 1998 on the radiative forcing of various greenhouse gases:

The forcing from a change in CO2 is estimated as F = α * ln(C/C0) - this is a shorthand fit to what is calculated from a number of line-by-line radiative calculations.

The 1990 expression, which I presume is what Hansen used in the 1988 model, had α = 6.3, while Myhre et al 1998, using better radiative estimates, has α = 5.35. That value has been used ever since in modeling estimates.

I suspect that difference in estimating radiative forcing may be responsible for much of the 4.2°C/doubling sensitivity Hansen 1988 (over)estimated, as opposed to the roughly 3°C/doubling value used now.
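To put numbers on the comment above, here is a minimal Python sketch of the simplified expression F = α * ln(C/C0) evaluated for a doubling of CO2 with each constant. The function name is hypothetical, and the 280 ppm baseline is an assumption chosen only for illustration; for a doubling, the result depends only on the ratio C/C0. With these constants the forcing is in W/m².

```python
import math

ALPHA_1990 = 6.3         # older constant quoted in the comment
ALPHA_MYHRE_1998 = 5.35  # Myhre et al 1998 constant


def co2_forcing(concentration_ppm: float, baseline_ppm: float,
                alpha: float) -> float:
    """Simplified CO2 radiative forcing, F = alpha * ln(C / C0), in W/m^2."""
    return alpha * math.log(concentration_ppm / baseline_ppm)


# Assumed baseline of 280 ppm; doubling it gives 560 ppm.
baseline = 280.0
doubling_old = co2_forcing(2 * baseline, baseline, ALPHA_1990)
doubling_new = co2_forcing(2 * baseline, baseline, ALPHA_MYHRE_1998)

print(f"alpha = {ALPHA_1990}: {doubling_old:.2f} W/m^2 per doubling")
print(f"alpha = {ALPHA_MYHRE_1998}: {doubling_new:.2f} W/m^2 per doubling")
print(f"ratio new/old: {doubling_new / doubling_old:.3f}")
```

The newer constant lowers the forcing per doubling by about 15%, which is the size of the difference the comment is pointing at.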

Tamino has updated Hansen's 1988 prediction by swapping in actual values of forcings (except volcanic) more recently than was done by RealClimate seven years ago. The forcings are closest to Hansen's Scenario C forcings. So actual temperatures should have been closest to Hansen's Scenario C model projection. Guess what?

Several obvious problems here.

First, Hansen's 1988 presentation was about emissions, as the Congressional record clearly shows. Actual global emissions look like Scenario A (if mainly because of China). Yes, the forcings in the model depend on concentrations, but if methane or CO2 concentrations didn't do what the model expected, that is a failure of Hansen 1988 to accurately model the relationship of emissions to concentrations. This is part of what critics have accurately labelled the "three-card monte" of climate science: make a claim, then defend some other claim while never acknowledging the original claim was false. This is not how good science is done.

Second, the graph purports to measure whether Hansen 1988 made accurate predictions, but it doesn't start in 1988. To show the hindcast (which the model was deliberately tuned to!) is another kind of three-card monte.

One can quibble with the choice of the GISS surface temperature record, given that Hansen himself administered it, but the divergence from satellite doesn't really affect the conclusions, so suffice it to note the 1988 prediction looks slightly worse using UAH or RSS.

Another minor quibble is that the article is now somewhat out of date — we're well into the 2010s and the predicted warming is still not materializing. This also makes the prediction slightly more wrong.

Last, and most risibly, the resemblance of actual temperatures to Scenario C predictions is now being held up as vindication. Even if that were defensible on the merits, it would certainly have come as a great shock to Hansen and his audience in 1988. Imagine a time traveler arriving from 2014, barging into Senate hearings brandishing the satellite record and yelling "Hansen was right! Look, future temperatures are just like Scenario C!" The obvious conclusion would have been "oh good, there's no problem then."

As this is an advocacy site, I do not expect these errors to be corrected; I merely state them for the record so visitors to the site can draw their own conclusions.

TallDave, you say: "Actual global emissions look like Scenario A (if mainly because of China)."

Except that if you read the link in the post directly above your own, you find information to the contrary. Perhaps you'd care to present your alternative data demonstrating that emissions (or even better, forcings) do in fact look like Scenario A.

TallDave - Um, no. Emissions more closely tracked Hansen's Scenario C in terms of total forcings, as shown here.

[Source]

Those emission scenarios were "what-if" cases (not predictions) of economic activity, which are not in and of themselves climate science. Any mismatch between those scenarios and actual events would only be an issue if Hansen was writing on economics, not on climate. He could hardly have predicted the Montreal Protocol on CFCs, for example.

"suffice it to note" - Strawman with respect to satellite temps, as Hansen was discussing surface temperatures and not mid-tropospheric temperature; you're attempting to attack something that wasn't discussed in his paper.

Yes, that 1988 paper is now out of date. Amazingly, though, it's frequently brought up and attacked on 'skeptic' websites, as if it represented current information - so it's quite reasonable to discuss these attacks on SkS as the current denial myths they are.

The real question is whether the model performs well in replicating temperatures given particular forcings - and since it does, it was a pretty decent model. The predictions of the paper were not "either Scenario A, B, or C will occur", but rather "Given a set of stated emissions, here's what the temperatures are likely to be", establishing a relationship between concentrations of forcing agents, and temperatures.

The major problem with his 1988 predictions came down to slightly too high a sensitivity to forcings (4.2 C/doubling of CO2, rather than ~3.2). This was strongly influenced by the radiative models and available data of the time, with the simplified expression at the time for CO2 forcing being 6.3*ln(C/C0). That constant was updated a decade later by Myhre et al 1998 to 5.35*ln(C/C0). Hansen used the data he had at the time, and his sensitivity estimate was a bit high.

If Hansen had time-traveled a decade and used that later estimate, his input sensitivity would have been ~3.57 C/doubling of CO2 - and his emissions to temperature predictions for the next 25 years would have been astoundingly accurate.

Your comment is a collection of strawmen arguments, and rather completely misses the point of the Hansen 1988 paper. It drew conclusions regarding the relationship of greenhouse gases and temperatures, not on making economic predictions. If you're going to disagree with it, you should at least discuss what the paper was actually about.
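The ~3.57 figure in the comment above can be reproduced with a short Python sketch. It assumes, as the comment appears to, that the model's sensitivity per doubling scales in direct proportion to the CO2 forcing constant with everything else held fixed; the function name is hypothetical.

```python
HANSEN_SENSITIVITY = 4.2  # °C per CO2 doubling in the 1988 model
ALPHA_1990 = 6.3          # older CO2 forcing constant
ALPHA_MYHRE_1998 = 5.35   # Myhre et al 1998 constant


def rescale_sensitivity(sensitivity: float, alpha_old: float,
                        alpha_new: float) -> float:
    """Scale sensitivity in proportion to the forcing constant.

    Assumption for illustration: the temperature response per unit of
    forcing is unchanged, so only the forcing per doubling differs.
    """
    return sensitivity * alpha_new / alpha_old


rescaled = rescale_sensitivity(HANSEN_SENSITIVITY, ALPHA_1990,
                               ALPHA_MYHRE_1998)
print(f"{rescaled:.2f} °C per doubling")  # prints 3.57 °C per doubling
```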

Talldave @20 claims, "Hansen's 1988 presentation was about emissions, as the Congressional record clearly shows."

Conveniently he neglects to link to the congressional record, where Hansen says:

Curiously, he makes no mention of emissions at all, when enumerating his three conclusions.

Later, and talking explicitly about the graph from Hansen et al (1988) which showed the three scenarios, he says:

Hansen then goes on to discuss other key signatures of the greenhouse effect.

As you recall, Talldave indicated that Hansen's testimony was about the emissions. It turns out, however, that the emissions are not mentioned in any of Hansen's three key points. Worse for Talldave's account, even when discussing the graph itself, Hansen spent more time discussing the actual temperature record, and the computer trend over the period in which it could then (in 1988) be compared with the temperature record. What is more, he indicated that was the main point.

The different emission scenarios were mentioned, but only in passing in order to explain the differences between the three curves. No attention was drawn to the difference between the curves, and no conclusions drawn from them. Indeed, for all we know the only reason the curves past 1988 are shown was the difficulty of redrawing the graphs accurately in an era when the pinnacle of personal computers was the Commodore Amiga 500. They are certainly not the point of the graph as used in the congressional testimony, and the congressional testimony itself was not "about emissions", as actually reading the testimony (as opposed to merely referring to it while being careful not to link to it) demonstrates.

Ironically, Talldave goes on to say:

Ironic, of course, because it is he who has clearly and outrageously misrepresented Hansen's testimony in order to criticize it. Were he to criticize the contents of the testimony itself, mention of the projections would be all but irrelevant.

As a final note, Hansen did have something to say about the accuracy of computer climate models in his testimony. He said, "Finally, I would like to stress that there is a need for improving these global climate models, ...". He certainly did not claim great accuracy for his model, and believed it could be substantially improved. Which leaves one wondering why purported skeptics spend so much time criticizing obsolete (by many generations) models.

Tristan/KR: as I pointed out in my original post, you're making the usual mistake of confusing "emissions" with "concentrations" or "forcings." Again, Hansen made predictions explicitly based on emissions to Congress; see his full remarks in the pdf below. Indeed, the purpose of the hearing was to persuade Congress to take action on emissions.

Emissions (especially of CO2) rose like Scenario A. Don't take my word for it, ask the EPA:

http://www.epa.gov/climatechange/images/ghgemissions/TrendsGlobalEmissions.png

Tom: Here is the entire text of Hansen's remarks. Note this passage:

"We have considered cases ranging from business as usual, which is Scenario A, to draconian emissions cuts, Scenario C..."

http://image.guardian.co.uk/sys-files/Environment/documents/2008/06/23/ClimateChangeHearing1988.pdf

Surely no one seriously argues there were draconian cuts in emissions after 1988.

"Which leaves one wondering why purported skeptics spend so much time criticizing obsolete (by many generations) models."

Obviously because they're the only ones that can be tested on any meaningful time scale. Contra this site, the ability of a model to hindcast a highly complex phenomenon gives little confidence in its forecast (something painfully well-known in other fields).

Tom C is not saying Hansen did not mention emissions, just that they were not central to Hansen's testimony, and did not figure into his main points which were about whether observed warming had happened and whether it could be linked to GHGs and therefore be tied to humans.

What matters in any climate model is the GHG forcing, which is a function of concentrations. Emissions affect that, but indirectly and in a lagged fashion, so the timing of emissions and their makeup affect the realized concentration.

Hansen could not know that methane would show its odd pattern over time, that CFC production would be curtailed by the Montreal Protocol a few years later, and that the Soviet bloc would collapse, along with its industry. Current emissions of CO2 in particular could be pretty high, but if the timing of those emissions was backloaded, you will not see the overall forcing.

Your argument for your fixation on old models doesn't ring true. More sophisticated GCMs produced later have plenty of new data they can be compared against. Nobody gauges what you can do with a current computer based on what the Commodore Amiga could do in 1988. That is a crazy idea.