Why were the ancient oceans favorable to marine life when atmospheric carbon dioxide was higher than today?

Posted on 12 November 2015 by Rob Painting

When we look back through the geological record, we see that for much of the last 500 million years there was an abundance of life in the oceans and that atmospheric carbon dioxide was much higher than today for the vast majority of that time. Though it may seem counterintuitive, especially considering that ocean pH was lower than present-day, the ancient oceans were generally more hospitable to marine calcification (building shells or skeletons of calcium carbonate) than they are now [Arvidson et al (2013)].

Numerous examples exist to support this, such as the enormous coccolith deposits that make up the White Cliffs of Dover in England. These tiny coccolith shells are made of calcium carbonate (chalk) and date from the Cretaceous Period (the name derives from creta, the Latin word for chalk) about 145 to 66 million years ago - when atmospheric CO2 concentration was several times that of today. The Cretaceous ocean was so conducive to marine calcification that it also saw the emergence of giant shellfish called rudists as major reef-builders.

Figure 1 - Rudist fossils dating from the Cretaceous Period. Marine calcification during this time of higher-than-present atmospheric CO2 concentrations was very clearly not a problem for this marine organism. Image from Schumann & Steuber (1997).

Given the relationship between the concentration of carbon dioxide in the atmosphere and the pH of the ocean, why are scientists concerned about falling ocean pH when it was lower for much of the last 500 million years? The simple answer is that these were not times of ocean acidification per se, and the key difference lies in the time scales and chemical processes involved. Ocean acidification occurs only when atmospheric carbon dioxide increases in a geologically rapid manner, because only then do pH and carbonate ion abundance decline in tandem, and it’s the decrease in carbonate ions that makes seawater corrosive to calcium carbonate forms [Kump et al (2009)]. While the increase in dissolved CO2 and hydrogen ion concentration (falling pH) would have proven stressful for some ancient marine life, such as coral [Cohen & Holcomb (2009), Cyronak et al (2015)], the corrosive state of surface waters likely delivered the decisive blow.

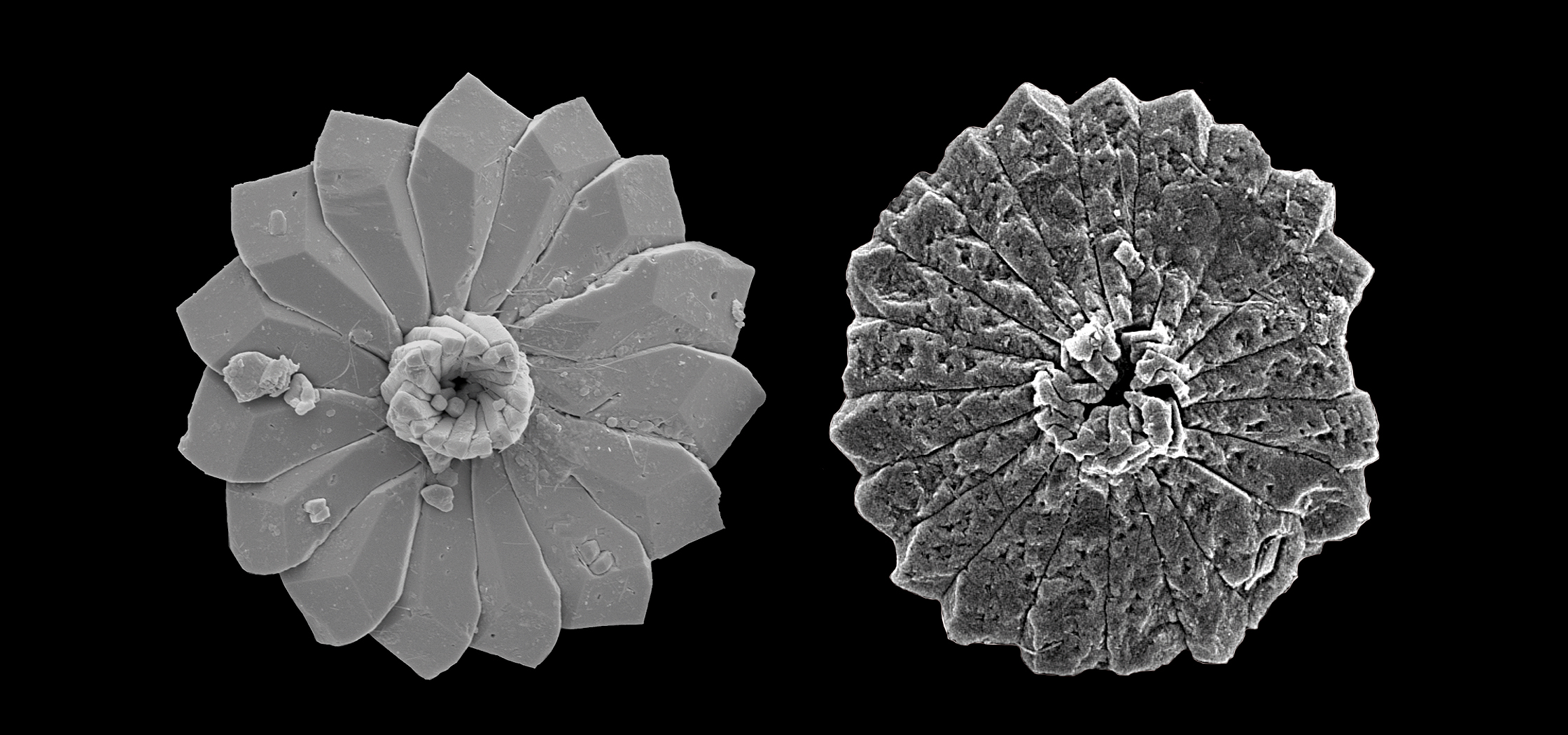

A clear illustration of the marked difference between high and low carbonate saturation states is shown in Figure 2. Both marine fossils (Discoaster) are from ancient periods of high atmospheric CO2 (i.e. low pH), but the fossil on the left pre-dates the Paleocene-Eocene Thermal Maximum (PETM) some 55-56 million years ago. Carbonate ions were abundant prior to the PETM, but a geologically rapid pulse of CO2 entered the atmosphere during the PETM and lowered the abundance of carbonate ions. The fossil on the right dates from the PETM and shows clear signs of dissolution, indicating that the oceans at that time were corrosive to marine calcifiers, as we'd expect, because ocean acidification was underway [Penman et al (2014)].

Figure 2 - Fossils of the marine calcifier Discoaster before (left) and during (right) the Paleocene-Eocene Thermal Maximum (PETM). The poor condition of the fossil from the PETM - exhibiting obvious signs of dissolution - is consistent with the ocean acidification event that accompanied the PETM. Image courtesy of Professor Patrizia Ziveri.

The Situation with Saturation

Extra carbon dioxide dissolving into the ocean sets off a number of reactions. The best-known is the increase in hydrogen ions, which lowers ocean pH, but some of these extra hydrogen ions also react with carbonate ions in seawater to form bicarbonate. This reaction buffers ocean pH in that the uptake of hydrogen ions prevents pH falling to an otherwise lower level, but it has the unfortunate side-effect of decreasing the number of carbonate ions. This is important because a decrease in carbonate ion abundance also causes a decrease in the calcium carbonate saturation state [Raven et al (2005)].
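
In simplified textbook form, the reactions described above are commonly written as follows. This is a sketch only: the intermediate carbonic acid step and the summed net reaction are standard seawater chemistry, not notation taken from the sources cited here.

```latex
\begin{align*}
\mathrm{CO_2 + H_2O} &\rightleftharpoons \mathrm{H_2CO_3} \rightleftharpoons \mathrm{H^+ + HCO_3^-} \\
\mathrm{H^+ + CO_3^{2-}} &\rightleftharpoons \mathrm{HCO_3^-} \\
\text{net:}\quad \mathrm{CO_2 + H_2O + CO_3^{2-}} &\rightleftharpoons \mathrm{2\,HCO_3^-}
\end{align*}
```

The net line shows why carbonate ions are used up: each molecule of dissolved CO2 that is buffered in this way converts one carbonate ion into bicarbonate.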

One way to think about this is to consider that most chemical reactions go in both directions at once, but that one direction is more favored for a given set of conditions. With the formation of calcium carbonate in the modern ocean there is also a reverse reaction occurring but, at current equilibrium, calcium carbonate precipitation (formation of a solid in a solution) is strongly favored. So much so that calcification in modern-day seawater is relatively easy - a state known as supersaturation. Decreasing the abundance of carbonate ions dissolved in seawater begins to shift the balance of these chemical reactions. When the carbonate ion abundance falls to the point that dissolution of calcium carbonate is favored over precipitation, the oceans are described as being undersaturated with respect to calcium carbonate.
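
As a rough illustration, saturation state is commonly expressed as Ω: the product of the dissolved calcium and carbonate ion concentrations divided by the solubility product of the mineral. Values of Ω above 1 favor precipitation and values below 1 favor dissolution. The Python sketch below uses assumed round-number values for surface seawater (calcium of about 0.0103 mol/kg and an aragonite solubility product of about 6.5e-7 mol²/kg²). Neither number comes from this article, both vary with temperature, salinity and pressure, and the function names are hypothetical.

```python
# Assumed, approximate values for warm surface seawater (not from the article).
CALCIUM_MOL_PER_KG = 0.0103   # dissolved calcium, roughly constant on short time scales
KSP_ARAGONITE = 6.5e-7        # apparent solubility product of aragonite, mol^2/kg^2


def saturation_state(carbonate_umol_per_kg,
                     calcium=CALCIUM_MOL_PER_KG,
                     ksp=KSP_ARAGONITE):
    """Return omega = [Ca2+][CO3 2-] / Ksp for a carbonate level in umol/kg."""
    carbonate_mol_per_kg = carbonate_umol_per_kg * 1e-6
    return calcium * carbonate_mol_per_kg / ksp


def describe(omega):
    """Label seawater by which direction of the reaction is favored."""
    if omega > 1:
        return "supersaturated"
    if omega < 1:
        return "undersaturated"
    return "saturated"


if __name__ == "__main__":
    # Hypothetical carbonate ion levels, from high to low.
    for carbonate in (200, 100, 50):
        omega = saturation_state(carbonate)
        print(f"{carbonate} umol/kg -> omega = {omega:.2f} ({describe(omega)})")
```

Because calcium is held fixed, Ω in this sketch simply scales with carbonate ion abundance, which is the point made above: it is the loss of carbonate ions, not pH itself, that tips seawater from supersaturated to undersaturated.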

So, irrespective of whether carbonate or bicarbonate is the chosen shell-making medium, under these conditions we expect to see calcium carbonate formation become increasingly difficult until a point of dissolution is reached. What about calcium? Calcium is very clearly an important element in the formation of calcium carbonate but, unlike carbon, its concentration in seawater is determined by the slow rate of chemical weathering of surface rocks and is therefore very stable on the geologically short time scales relevant to ocean acidification.

Chemical weathering and timescales

Over hundreds of thousands to millions of years, additional carbon dioxide is released into the atmosphere through volcanic activity and removed by weathering of silicate and carbonate rocks [Walker et al (1981)]. CO2 acts somewhat like a planetary thermostat: the Earth’s climate would gradually drift up or down by itself if these processes were not in approximate balance. Rock weathering takes place as a result of carbon dioxide in the atmosphere reacting with moisture to form a weak acid, known as carbonic acid, in rainwater. This slightly acidic rainwater reacts with surface rocks to liberate dissolved inorganic carbon (DIC), in the form of bicarbonate and carbonate, which is then flushed via rivers into the oceans.
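
The weathering reactions described above can be sketched in simplified textbook form. CaSiO3 is used here as an assumed stand-in for silicate rock in general; real silicate minerals are far more varied, and these equations are not taken from the sources cited in this post.

```latex
\begin{align*}
\mathrm{CO_2 + H_2O} &\rightleftharpoons \mathrm{H_2CO_3} \\
\mathrm{CaCO_3 + H_2CO_3} &\rightarrow \mathrm{Ca^{2+} + 2\,HCO_3^-} \\
\mathrm{CaSiO_3 + 2\,H_2CO_3} &\rightarrow \mathrm{Ca^{2+} + 2\,HCO_3^- + SiO_2 + H_2O}
\end{align*}
```

In both cases the products carried to the ocean by rivers are dissolved calcium and bicarbonate.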

A rapid increase in atmospheric CO2, be that from intense and sustained volcanic activity or industrial fossil fuel emissions, throws the system out of balance because rock weathering is such a slow process. As carbon dioxide rapidly accumulates in the atmosphere, more dissolves into the ocean, lowering both ocean pH and carbonate ion abundance. Eventually, the surface oceans can become corrosive, and this is why ocean acidification is linked to some of the major extinction events in Earth’s past - these corrosive waters are implicated as a marine kill mechanism [Veron (2008), Kiessling & Simpson (2011), Payne & Clapham (2012) and Clarkson et al (2015)].

On the time scale of tens of thousands to hundreds of thousands of years, however, the climate system works to restore dissolved inorganic carbon to the ocean - elevating the abundance of carbonate ions and thus raising the calcium carbonate saturation state of ancient seawater. So how does this work? Raising the concentration of carbon dioxide in the atmosphere also raises atmospheric temperature. A warmer atmosphere holds more moisture, which in turn leads to more intense downpours and a global increase in the rate of rock weathering over time. As the rate of dissolved inorganic carbon being flushed into the ocean increases, so too does the calcium carbonate saturation state.

Experiments with the GENIE Earth system model [Honisch et al (2012)] show the result of increasing atmospheric CO2 on ocean pH and saturation state for aragonite, a common form of calcium carbonate in the modern ocean, at various timescales. With a doubling of carbon dioxide from 278 ppm (pre-industrial) to 556 ppm, pH decreases markedly regardless of the timescale. Saturation state, however, only changes significantly at shorter timescales, and the response diminishes at progressively longer ones. All scenarios eventually lead to the overcompensation of saturation state at the 100,000-year timescale due to the oversupply of dissolved inorganic carbon from weathering.

Figure 3 - Simulations with the GENIE Earth system model reveal the progressive decoupling of pH and carbonate (aragonite) saturation at increasing timescales - the result of the slow process of chemical weathering of rocks. Note that each vertical line of the graph represents a tenfold increase in the timescale. Image adapted from Honisch et al (2012).

Comparing apples with apples

A common myth about ocean acidification is that high atmospheric CO2 and abundant marine life in Earth’s ancient past present some kind of paradox for scientists. This myth, however, is based on a misconception of ocean acidification. It is the rapid accumulation of atmospheric carbon dioxide, and the lowering of carbonate ion abundance which results from this, that makes the ocean become corrosive to calcium carbonate. The ocean remains an alkaline solution despite the fall in pH. The appropriate analogues for present-day ocean acidification are ancient periods when carbon dioxide in the atmosphere rose so sharply that it outpaced the rate of rock weathering, not intervals when atmospheric CO2 was elevated for long periods of time. Although this is a reasonably new area of research, ancient ocean acidification events appear to be connected with extinctions and crises amongst marine calcifiers.

Ocean acidification is deadly serious

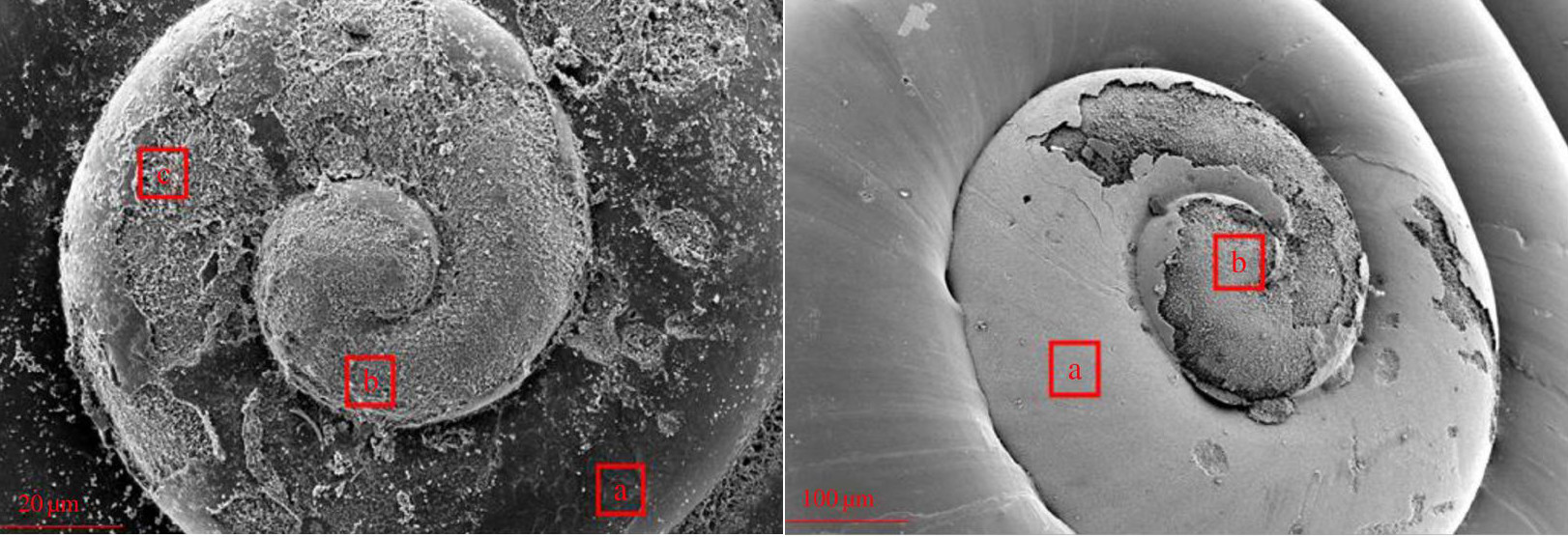

With an appreciation of the mechanism of silicate and carbonate rock weathering we can now see how the past, and observations in the present, make sense, and why ocean acidification is a threat to marine organisms like coral [Hoegh-Guldberg et al (2007)]. The shells of calcifying marine species living in parts of the ocean that today become periodically carbonate undersaturated [Bednarsek et al (2012), Barton et al (2012), Bednarsek et al (2014)], and of those exposed to carbonate undersaturation in laboratory experiments [Waldbusser et al (2015)], dissolve because the seawater has become corrosive. The same occurred during acidification events in the ancient ocean. During periods when atmospheric CO2 was elevated on geologic time scales, however, calcification posed few problems for ancient marine life because rock weathering supplied sufficient dissolved inorganic carbon to the ocean to keep the abundance of carbonate ions elevated. Gradual change would also have allowed more time for marine species to adapt to the lower pH and higher dissolved CO2 concentrations in ancient seawater.

Figure 4 - Pteropod shells taken from the California Current in 2011. This is a region of seasonally carbonate undersaturated seawater. The shell on the left is from the more corrosive coastal surface waters, and the one on the right from further out to sea - where saturation state is higher. Image adapted from Bednarsek et al (2014).

One important aspect to note about acidification in the ancient ocean is that it was probably much slower than the current episode. As far as we can tell, looking back over the last 300 million years [Zeebe (2012), Honisch et al (2012)], the oceans have never become as corrosive as quickly as they are doing now. The speed at which this ‘pulse’ of fossil carbon is being injected into the atmosphere is so extraordinary that it far exceeds even that associated with the greatest extinction event in Earth’s history, the Permian-Triassic extinction - when over 90% of marine species perished. Although a direct comparison with these ancient extinction events is not possible because they involved a much larger pulse of carbon taking place over tens of thousands of years, the inference that much ancient marine life could not adapt to changes occurring on millennial time scales highlights the danger of acidifying the oceans over the course of several hundred years - as we are currently doing.

For more information about ocean acidification see the OA Not OK series on the left-hand side of the page.


Arguments


" As far as we can tell, looking back over the last 300 million years"

When I see these kinds of claims, I always want to know--was there a more rapid acidification event 301 million years ago? Or is this just as far back as we can accurately reconstruct acidification events at this point?

Does anyone know which of these scenarios we are dealing with here?

Sorry, but your question asks the impossible because 300 million years is so big compared to anything we as humans have experienced.

It's the same scale as saying something happened 1 year ago, and then asking about a year and 1/10th of a second ago.

Geologic time scales are hard to comprehend and of course can only be very general and never down to the nearest year. I'd suggest that the "error" is a few tens of millions of years, but will be happy to be corrected by someone with more expertise than me.

...by definition the error is at least tens of millions of years assuming an acceptable error value of +/- 5%!

bozza @3, geological events are not timed based on "acceptable error values". They are timed using the means available to as high an accuracy as is technically feasible. Errors in the hundreds of thousands of years in the mid Phanerozoic are achievable in some circumstances (example), and of one to three million years in many circumstances (example).

Further, uncletimrob's claim that dating uncertainty makes answering the question in the OP's title impossible is simply false. First, the answer has been given by detailed computer modelling as shown in Fig 3. Second, the answer has been confirmed by a large number of empirical studies where relative dating based on stratigraphy is sufficient to distinguish later from earlier times and provide an answer, even if precise absolute dating is impossible.