The Other Bias

Posted on 15 November 2013 by Kevin C

Looking at three versions of the global surface temperature record, with their different behaviour over the last decade and a half, it is only natural to wonder 'which is right?'.

To answer this question, we need to know why they differ, and the first place to look is at the known sources of bias which affect the different versions of the temperature record.

One source of bias - due to poor observational coverage - has been discussed in our recent paper, although it was reported back in 2009, and it was addressed by NASA as long ago as 1987.

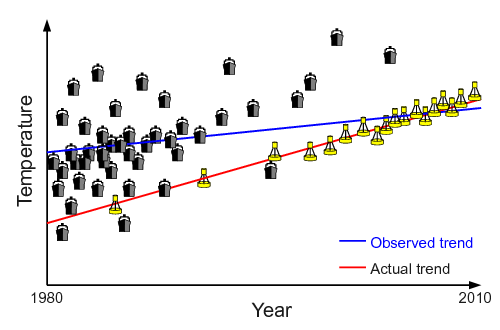

The other source of bias comes from a change in the way sea surface temperatures (SSTs) are measured. In the 1980s most SST observations came from sensors in ships' engine-room water intakes; over the last two decades, however, there has been a shift to observations from buoys. The ship-based observations are subject to biases which depend on a number of factors, including the design of the ship, but on average they read slightly warm. As a result, the raw observations can give a misleadingly low trend for short periods spanning the changeover, such as the last 15 years. The problem is illustrated in Figure 1.

Figure 1: How a transition from ship to buoy measurements can bias temperature trends. This image is illustrative and does not represent the actual trends in observation types, which are more complex.
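The mechanism in Figure 1 can be reproduced with a toy calculation. The sketch below is not the HadSST3 method: it assumes a hypothetical constant warm offset for ship readings, unbiased buoys, and a buoy share of observations that rises linearly, then compares the least-squares trend of the blended record with the true trend. All of the numbers (`true_trend`, `ship_offset`, the buoy fraction) are invented for illustration.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx


def blended_series(years, true_trend, ship_offset, buoy_fraction):
    """Mean of ship and buoy readings, weighted by each year's buoy share.

    Ships read `ship_offset` warmer than the truth; buoys read the truth.
    """
    series = []
    for year, f in zip(years, buoy_fraction):
        truth = true_trend * (year - years[0])
        ship = truth + ship_offset
        buoy = truth
        series.append((1.0 - f) * ship + f * buoy)
    return series


years = list(range(1995, 2013))
# Hypothetical buoy share rising linearly from 0% to 85% of observations.
buoy_fraction = [0.85 * i / (len(years) - 1) for i in range(len(years))]
true_trend = 0.015   # °C/year, hypothetical
ship_offset = 0.12   # °C warm bias of ship readings, hypothetical

observed = blended_series(years, true_trend, ship_offset, buoy_fraction)
print(f"true trend:     {10 * true_trend:.3f} °C/decade")
print(f"observed trend: {10 * ols_slope(years, observed):.3f} °C/decade")
```

With these made-up numbers the blended record warms at about 0.09 °C/decade instead of 0.15 °C/decade: the warm ship offset fades out as buoys take over, and that fading is read as cooling.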

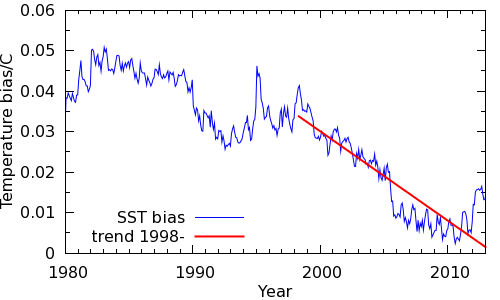

The UK Met Office have developed a very sophisticated analysis to address these biases. The resulting corrections are included in the HadSST3 sea surface temperature record, but these corrections have not yet been applied to the SST records used by NASA or NOAA. The bias in the other versions of the global temperature record due to the change in observational platforms can be estimated from the difference between the raw and adjusted HadSST3 data, and is shown in Figure 2.

Figure 2: Impact of SST bias on the global (i.e. land/ocean) temperature record estimated from the HadSST3 data.

We can see that the change in observational methods will tend to suppress temperature trends starting in the mid-1990s and running to the present. Of particular interest are the coverage and SST biases in trends for different start dates, shown in Table 1. The second column gives the bias in the global trend due to coverage; the third gives the bias due to the change in SST observational method.

| Start year | Coverage bias (°C/decade) | SST bias (°C/decade) |
|---|---|---|
| 1990 | -0.020 | -0.014 |
| 1991 | -0.020 | -0.015 |
| 1992 | -0.027 | -0.016 |
| 1993 | -0.030 | -0.018 |
| 1994 | -0.034 | -0.019 |
| 1995 | -0.036 | -0.021 |
| 1996 | -0.039 | -0.021 |
| 1997 | -0.055 | -0.022 |
| 1998 | -0.058 | -0.022 |
| 1999 | -0.056 | -0.021 |
| 2000 | -0.055 | -0.020 |
| 2001 | -0.057 | -0.020 |
| 2002 | -0.056 | -0.018 |
| 2003 | -0.083 | -0.015 |
| 2004 | -0.081 | -0.011 |
| 2005 | -0.045 | -0.004 |

Table 1: Trend bias by start date due to coverage and SST bias.
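A table like Table 1 comes from comparing trends over a range of start dates. A minimal sketch of that kind of calculation takes the difference between the least-squares trends of a biased and an unbiased series for each start year. It assumes annual anomalies and a common end year of 2012 (an assumption; the table does not state its end date), and the two input series are synthetic. It is not the code used for the paper.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs (units of ys per x)."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


def trend_bias_by_start(years, biased, unbiased, start_years, end_year):
    """Return {start_year: trend(biased) - trend(unbiased)} in °C/decade.

    `years`, `biased` and `unbiased` are parallel lists of annual values;
    each trend runs from the start year to `end_year` inclusive.
    """
    result = {}
    for start in start_years:
        idx = [i for i, y in enumerate(years) if start <= y <= end_year]
        xs = [years[i] for i in idx]
        b = ols_slope(xs, [biased[i] for i in idx])
        u = ols_slope(xs, [unbiased[i] for i in idx])
        result[start] = 10.0 * (b - u)  # per year -> per decade
    return result


# Synthetic example: the "unbiased" record warms at 0.2 °C/decade, and the
# "biased" record drifts 0.01 °C/decade cooler from 1995 onwards.
years = list(range(1980, 2013))
unbiased = [0.02 * (y - 1980) for y in years]
biased = [u - 0.001 * max(0, y - 1995) for u, y in zip(unbiased, years)]

bias = trend_bias_by_start(years, biased, unbiased, range(1990, 2006), 2012)
for start, value in bias.items():
    print(f"{start}: {value:+.4f} °C/decade")
```

In this synthetic case the bias is -0.01 °C/decade for every start year from 1995 onwards, and smaller in magnitude for earlier start years, whose trends include years before the bias begins.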

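Note that trends starting in 1997 and 1998 are the most biased by the change in observational method. The striking thing about this result is that these are also the trends whose statistical significance is most affected by coverage bias. (In absolute terms the coverage bias is larger still for 2003 and 2004 start dates, but those shorter trends carry less information.)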

Every version of the temperature record is affected by at least one of these sources of bias, as shown in Table 2.

| Record | Coverage bias | SST bias |
|---|---|---|
| Met Office | X | |
| NASA | | X |
| NOAA | X | X |

Table 2: Biases present in the main versions of the temperature record.

We can produce an initial estimate of what GISTEMP would look like if it included the HadSST3 bias corrections by taking the difference between the raw and adjusted HadSST3 data, weighting each grid cell by ocean fraction, and applying the resulting shifts to the GISTEMP blended data. The 16-year trends for this data and for the published records are given in Table 3. The corrected GISTEMP trend is very close to the trend for the infilled HadCRUT4 data from Cowtan & Way (2013).

| Dataset | Trend (°C/decade) |
|---|---|
| HadCRUT4 | 0.046 |
| GISTEMP | 0.080 |
| GISTEMP+SST adj. | 0.103 |
| HadCRUT4 infilled | 0.108 |
| HadCRUT4 hybrid | 0.119 |

Table 3: 16-year temperature trend comparison (1997/01-2012/12).
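The adjustment step behind the "GISTEMP+SST adj." row (adjusted minus raw HadSST3, weighted by ocean fraction, added to the blended field) can be sketched for a single month as follows. This is a minimal sketch under stated assumptions, not the code used for Table 3: it assumes every field is already on one common grid (in practice GISTEMP and HadSST3 use different grids, so a regridding step would be needed), stores each field as a hypothetical dict keyed by `(lat, lon)` cell centre, and uses a simple cos(latitude) area weight for the global mean.

```python
import math


def apply_sst_adjustment(blended, sst_raw, sst_adj, ocean_frac):
    """Add the ocean-fraction-weighted SST adjustment to a blended field.

    Every argument is a dict keyed by (lat, lon) cell centre for one month.
    Cells missing from the blended field stay missing; cells lacking SST
    data in either HadSST3 version are left unchanged.
    """
    corrected = {}
    for cell, value in blended.items():
        raw = sst_raw.get(cell)
        adj = sst_adj.get(cell)
        if value is None or raw is None or adj is None:
            corrected[cell] = value
        else:
            shift = adj - raw  # adjusted minus raw SST anomaly
            corrected[cell] = value + ocean_frac.get(cell, 0.0) * shift
    return corrected


def area_weighted_mean(field):
    """Mean of a (lat, lon) -> anomaly dict, weighting cells by cos(latitude)."""
    num = den = 0.0
    for (lat, _lon), value in field.items():
        if value is None:
            continue
        weight = math.cos(math.radians(lat))
        num += weight * value
        den += weight
    return num / den


# Hypothetical two-cell example: an all-ocean equatorial cell and a
# half-land cell at 60N whose SST adjustment happens to be zero.
blended = {(0, 0): 0.30, (60, 0): 0.50}
sst_raw = {(0, 0): 0.20, (60, 0): 0.40}
sst_adj = {(0, 0): 0.25, (60, 0): 0.40}
ocean_frac = {(0, 0): 1.0, (60, 0): 0.5}

corrected = apply_sst_adjustment(blended, sst_raw, sst_adj, ocean_frac)
print(corrected)
print(f"global mean: {area_weighted_mean(corrected):.3f}")
```

With these made-up numbers the equatorial cell moves from 0.30 to 0.35 °C and the cos-weighted mean of the corrected field is 0.40 °C. Repeating this for every month and then fitting a trend gives a corrected series of the kind compared in Table 3.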

At the start of the article I asked which existing version of the temperature record was 'right'. If both our paper and the HadSST3 paper are correct (and both are single studies which require replication), then the surprising answer is 'none of them'. All suffer from one or more sources of bias which have been suppressing temperature trends since around 1998, i.e. the start of the purported 'pause' in global warming. We anticipate that the coverage and SST biases account for most of the difference between the different temperature records over recent decades.

This obviously has implications for some papers on recent temperature trends, although the better papers which compare coverage-masked model outputs against HadCRUT4 are largely unaffected. This once again highlights the problem of drawing conclusions from short term trends.

Arguments

Hi guys, interesting article, but it raises one new question for me. These analyses show that the true surface temperature trend is larger than the observed one. The question is: what about satellite data, since their trends are slightly lower than those of the surface data? Is this due to a different thing being measured, are there other biases in the satellite data, or, after all, are there some other corrections that should be made to the surface data?

MP3CE - Most likely a low bias in the satellite records, as discussed here. Satellite temperature estimates are quite complex, and there have been repeated corrections to the data sets as various biases are found. Also see Po-Chedley and Fu 2012 for some specific UAH discussion.

See also the 2006 US Climate Change Science Program "Temperature Trends in the Lower Atmosphere - Steps for Understanding and Reconciling Differences", authored in part by Dr. John Christy (of the UAH data). Executive Summary pg. 10 notes that

Christy, however, does not mention this report anymore, insisting instead that the satellite data is the most accurate measure possible... perhaps a bit of bias on his part?

Great article. I like a lot about Figure 1: it really gets across what the problem is quite clearly, once you think about it for a moment. But I'm wondering why there are no units on the y-axis. If these are taken from real studies, it shouldn't be that hard to add them.

It makes me less likely to use this otherwise wonderful graph in explaining the issue to, for example, students, or to share it on other blogs, since the same criticism will likely be raised and I will be left with no answer (unless, of course, I am missing something blindingly obvious to everyone else... wouldn't be the first time ;-) ).

My other suggestion for making that graph even stronger is to draw another red line (or another color, if you wish, with a legend to mark it) showing how the ship-alone data, too, goes up faster than the combined set (blue line) does (unless my eyeballing calculation is off).

Anyway, thanks again and congrats on another great article.

Wili: It would be nice to do something like figure 1 with real data, but to do that we would have to reproduce the HadSST3 calculation, which is a really complex piece of work. And having done that it would probably be impossible to interpret visually because you would have the weather signal superimposed on top of the trend.

You could complement the illustration with some figures compiled from the real data from this article. (Which I suspect is the least-read of my contributions here, although as a piece of science journalism I think it is my best work.)

Hi

"Note that trends starting in 1997 and 1998 are most biased by the change in observational method. The striking thing about this result is that these are the same trends which are most impacted by coverage bias."

2003 and 2004 seem to exceed 1997/8. Perhaps they should read -0.053 and -0.051?

[JH] The replication of the OP and the comment thread has been deleted. There is no need to copy and paste either one into a comment.

Just below Figure 1 a paragraph begins "The UK Met Office have developed a very sophisticated analysis to address these biases."

"very sophisticated analysis" is hyperlinked to the main page of Skeptical Science. Two places that seem more appropriate are:

First Look at HadCRUT4

Posted on 18 April 2012 by dana1981

http://www.skepticalscience.com/first-look-at-hadcrut4.html

HadCRUT4: A detailed look

Posted on 22 May 2012 by Kevin C

http://www.skepticalscience.com/hadcrut4_a_detailed_look.html

The second seems especially relevant. I would leave Figure 1 as is, then hyperlink "more complex" in the caption to Figure 1 to the first and "a very sophisticated analysis" to the second.

I believe this would go a long way to addressing the concerns wili expressed in comment 3.

Kevin, the bias that you have isolated accurately is the one during WWII. I agree that a measurement error of about +0.1C occurs between 1940 and 1945. It is not clear whether time series such as GISTEMP actually correct for this. I have been doing my own time-series "reanalysis" via what I refer to as the CSALT model. This recreates the temperature record from non-temperature measurements such as CO2, SOI, Aerosols, LOD, and TSI (thus the acronym).

What I find is that there is a significant warming spike during the WWII years, which I correct below. The amount of correction is 0.1C, and when I apply it the model residuals trend closer to white noise over the entire record.
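The check described in this comment, removing a fixed warm offset over the war years and seeing whether the residuals look more like white noise, can be sketched using the lag-1 autocorrelation of the residuals as a simple whiteness measure. This is a hypothetical illustration: the CSALT regression itself is not reproduced, the residual series is synthetic, and the window (1941 to 1945) and offset (0.1 °C) are parameters chosen to match the commenter's description rather than fitted values.

```python
def lag1_autocorrelation(xs):
    """Lag-1 autocorrelation; values near zero are consistent with white noise."""
    n = len(xs)
    m = sum(xs) / n
    num = sum((xs[k] - m) * (xs[k + 1] - m) for k in range(n - 1))
    den = sum((x - m) ** 2 for x in xs)
    return num / den


def remove_window_offset(years, values, start, end, offset):
    """Subtract `offset` from values whose year lies in [start, end]."""
    return [v - offset if start <= y <= end else v for y, v in zip(years, values)]


# Synthetic residuals (data minus model): a small, roughly uncorrelated pattern
# plus a hypothetical +0.1 °C warm spike over 1941-1945.
years = list(range(1930, 1961))
noise = [0.02 if k % 4 in (0, 1) else -0.02 for k in range(len(years))]
residuals = [e + (0.1 if 1941 <= y <= 1945 else 0.0) for e, y in zip(noise, years)]

corrected = remove_window_offset(years, residuals, 1941, 1945, 0.1)
print(f"lag-1 autocorrelation before: {lag1_autocorrelation(residuals):.3f}")
print(f"lag-1 autocorrelation after:  {lag1_autocorrelation(corrected):.3f}")
```

A multi-year warm spike shows up as strong positive autocorrelation in the residuals; once the offset is removed, the synthetic residuals are left with autocorrelation close to zero.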

The third paragraph states, "One source of bias - due to poor observational coverage - has been discussed in our recent paper, although it was reported back in 2009, and it was addressed by NASA as long ago as 1987."

"Our recent paper" is hyperlinked to the main page of Skeptical Science. I believe you mean to link to:

Coverage bias. The HadCRUT4 and NOAA temperature records don’t cover the whole planet. Omitting the Arctic in particular produces a cool bias in recent temperatures. (e.g. Hansen et al 2006, Folland et al 2013). The video avoided this problem by using GISTEMP. However the issue affects the Foster and Rahmstorf analysis of the other records.

Has the rate of surface warming changed? 16 years revisited

Posted on 21 May 2013 by Kevin C

http://www.skepticalscience.com/has_the_rate_of_surface_warming_changed.html

... although:

The 2012 State of the Climate is easily misunderstood

Posted on 24 October 2013 by MarkR

http://www.skepticalscience.com/2012_soc_misunderstood.html

... may also be of some value.

WebHub Telescope, the issue of switching from intake water to buckets is covered here:

What caused such a dramatic drop in SST in 1945? In the wartime years leading up to 1945, most sea temperatures measurements were taken by US ships, who measured the temperature of the intake water used for cooling the ship's engines. This method tends to yield higher temperatures due to the warm engine-room environment. However, in August 1945, British ships resumed taking SST measurements. British crews collected water in uninsulated buckets. The bucket method has a cooling bias.

A new twist on mid-century cooling

Posted on 2 June 2008 by John Cook

http://www.skepticalscience.com/A-new-twist-on-mid-century-cooling.html

... and as you indicated it is a 1940s issue.

The problem involving the switch to buoys is more recent. It is introduced here:

Ships and buoys made global warming look slower

26 November 2010 by Michael Marshall

http://www.newscientist.com/article/dn19772-ships-and-buoys-made-global-warming-look-slower.html

Met Office to revise global warming data upwards

Leon Clifford, 26 Nov 2010

http://www.reportingclimatescience.com/news-stories/article/met-office-to-revise-global-warming-data-upwards.html

The issue is identified here:

Kennedy, J. J., R. O. Smith, and N. A. Rayner. "Using AATSR data to assess the quality of in situ sea-surface temperature observations for climate studies." Remote Sensing of Environment 116 (2012): 79-92.

http://hadleyserver.meto.gov.uk/hadsst3/RSE_Kennedy_et_al_2011.doc

... and receives some mention here:

Kennedy J.J., Rayner, N.A., Smith, R.O., Saunby, M. and Parker, D.E. (2011b). Reassessing biases and other uncertainties in sea-surface temperature observations since 1850 part 1: measurement and sampling errors. J. Geophys. Res., 116, D14103, doi:10.1029/2010JD015218

http://www.metoffice.gov.uk/hadobs/hadsst3/part_2_figinline.pdf

Additionally, NASA's approach uses a method of interpolation, and the somewhat more sophisticated kriging used in this more recent paper appears more accurate. The method doesn't infill from satellite data per se; instead, it uses the correlation between satellite data and surface temperatures, over the ranges where surface measurements are available, to infill where they are absent.

Please see:

Global Warming Since 1997 Underestimated by Half

Filed under: Climate Science Instrumental Record — stefan @ 13 November 2013

http://www.realclimate.org/index.php/archives/2013/11/global-warming-since-1997-underestimated-by-half

For more information on the new paper, might try:

Coverage bias in the HadCRUT4 temperature record

Kevin Cowtan and Robert Way

http://www-users.york.ac.uk/~kdc3/papers/coverage2013/

... and in particular, the background:

http://www-users.york.ac.uk/~kdc3/papers/coverage2013/background.html

Kevin C., somehow it escaped me that you are the Kevin Cowtan that coauthored the paper with Robert Way. Congratulations! I am looking forward to reading the paper.

TC,

I am looking at a very restricted interval during the war. KevinC posted the chart below in a previous SkS article; it shows that ships were not using trailing buckets as long as U-boats were on the loose:

My point is that this interval is exactly coincident with the only residual epistemic noise spike that I see when comparing my model to the data.

I am not certain which time series deal with this correctly.

WebHubbleTelescope:

There are two impacts of the HadSST3 corrections compared to earlier versions which only handled the 1942 discontinuity - the sharp correction in 1945, and the more gradual recent bias. In this article I mainly focus on the second.

I also managed to identify the post-war spike by the trivial method of comparing colocated coastal land and SST measurements. The results give a surprisingly good fit to the HadSST3 adjustments, barring a scale factor. The gradual change over the past couple of decades is far harder to check, although I may have picked up a weak echo of the signal in the contrast between sea-lane and non-sea-lane temperatures.
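A "good fit barring a scale factor" comparison can be sketched as an ordinary least-squares fit of the coastal land-minus-SST difference against the HadSST3 adjustment, with an offset term to absorb differences in anomaly baseline. This is a hypothetical sketch, not the commenter's actual calculation: the function name and the example series are invented, and the sign of the fitted scale depends on how the difference and the adjustment are defined.

```python
import math


def fit_scale(adjustment, difference):
    """Least-squares fit of difference = offset + scale * adjustment.

    Returns (scale, offset, r), where r is the Pearson correlation between
    the two series, as a rough measure of how good the fit is.
    """
    n = len(adjustment)
    mean_a = sum(adjustment) / n
    mean_d = sum(difference) / n
    s_aa = sum((a - mean_a) ** 2 for a in adjustment)
    s_dd = sum((d - mean_d) ** 2 for d in difference)
    s_ad = sum((a - mean_a) * (d - mean_d) for a, d in zip(adjustment, difference))
    scale = s_ad / s_aa
    offset = mean_d - scale * mean_a
    r = s_ad / math.sqrt(s_aa * s_dd)
    return scale, offset, r


# Hypothetical annual series (°C): an adjustment with a wartime excursion, and
# a coastal land-minus-SST difference that tracks it at half the amplitude.
adjustment = [0.0, 0.0, -0.05, -0.10, -0.10, -0.05, 0.0]
difference = [0.03 + 0.5 * a for a in adjustment]

scale, offset, r = fit_scale(adjustment, difference)
print(f"scale = {scale:.2f}, offset = {offset:.2f}, r = {r:.2f}")
```

A correlation near 1 with a scale factor other than 1 is the pattern described in the comment: the shape of the adjustment is confirmed even though its amplitude is not.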

Penchant: The numbers are right. In our paper we also look at the effect of bias on the significance of the trends, which is maximised for 1997/1998. The suggestion that trends starting in 1997/1998 are most misleading is based on this result.

Lay people do seem to have an instinctive grasp of the idea that longer-term trends carry more information, and so 'misleadingness' needs to be evaluated against this. Lacking a cognitive model of how people evaluate trend claims, the 'impact on significance' metric was the best we could do.

KC,

I assume the HadSST2 is the time series with the least amount of corrections.

This is what my inverted model residual looks like when compared to your fractional contribution measurement profile. Note that the Korean War was between 1950 and 1953, which might also have been a period when trailing buckets were not used.

And then the issue of insulated vs uninsulated buckets....


WebHub, my apologies. I misunderstood the nature of your comment.

WHT: That looks very plausible indeed. HadSST2 corrects up to the start-of-war discontinuity, but doesn't go deep enough, and there are no corrections after that. So the features are exactly what I would expect, with the exception of the 1950s feature that you've noted.

I presume you get an absolute magnitude out? If so I wonder if this is publishable as an independent test of the HadSST3 work.

Kevin, my correction is that the GISS temperature anomaly is 0.1C too warm for the years 1941 up until the end of 1945. A more complete description is here:

http://ContextEarth.com/2013/11/16/csalt-and-sst-corrections